16.9.8. Using Virtuoso Crawler

The Virtuoso Crawler includes the Sponger options, so you can crawl non-RDF resources, get RDF from them, and store that RDF in the Quad Store.
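For comparison, the same Sponger machinery can also be invoked directly from SPARQL. The following is a minimal sketch run from ISQL. It assumes the Sponger is enabled and that the executing user has the privileges needed to sponge (for example the SPARQL_SPONGE role). The target URL is the one used in the crawler example below. `DEFINE get:soft "replace"` asks Virtuoso to fetch the source named in `FROM`, convert it to RDF, and replace any previously cached copy before the query runs.

```sql
-- Sponge a (possibly non-RDF) page on the fly and query the RDF extracted from it.
-- get:soft "replace" re-fetches the source even if a cached copy exists;
-- get:soft "soft" would fetch it only if it is not cached yet.
SPARQL
DEFINE get:soft "replace"
SELECT DISTINCT ?s ?p ?o
FROM <http://www.w3.org/People/Berners-Lee/>
WHERE { ?s ?p ?o }
LIMIT 20;
```

The crawler is the better fit when many pages should be fetched and stored in bulk. The pragma above is convenient for checking what RDF a single page yields.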

Example:

- Go to the Conductor UI, for example at http://example.com/conductor:

Figure 16.86. Using Virtuoso Crawler

- Enter the admin user credentials:

Figure 16.87. Using Virtuoso Crawler

- Go to the Web Application Server tab:

Figure 16.88. Using Virtuoso Crawler

-

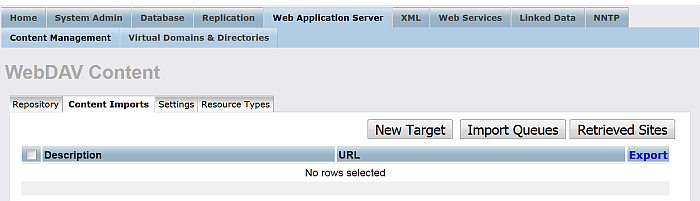

Go to tab Content Imports:

Figure 16.89. Using Virtuoso Crawler

-

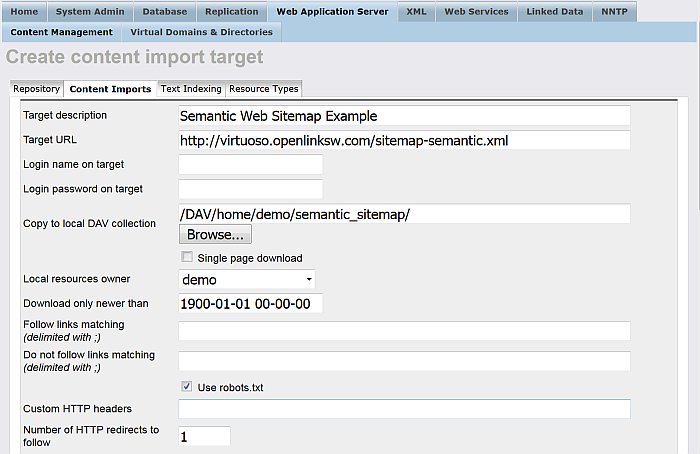

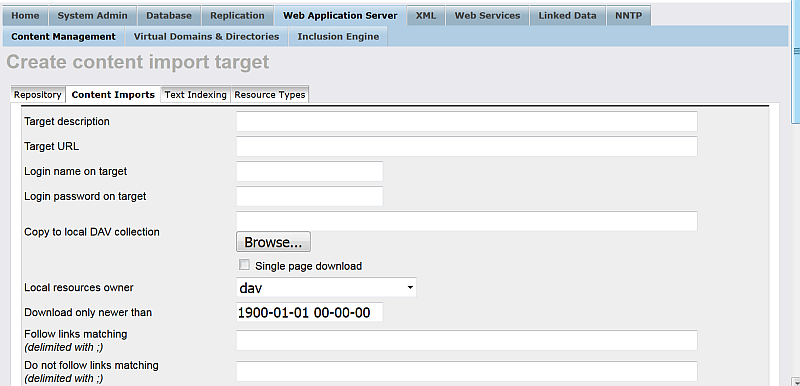

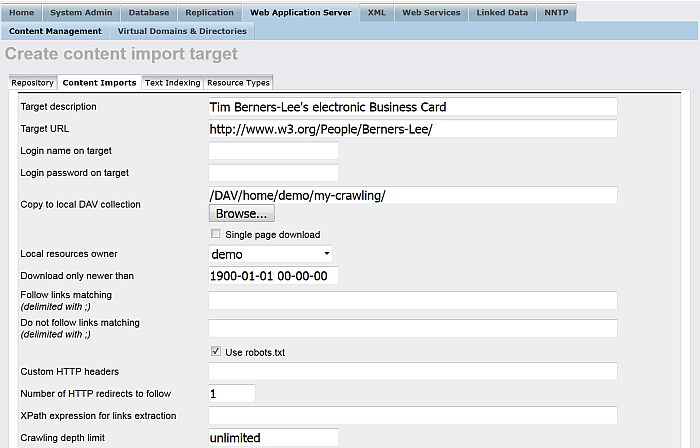

Click the "New Target" button:

Figure 16.90. Using Virtuoso Crawler

- In the form that appears, set the following:

  - "Target description": Tim Berners-Lee's electronic Business Card;

  - "Target URL": http://www.w3.org/People/Berners-Lee/;

  - "Copy to local DAV collection": for example, /DAV/home/demo/my-crawling/;

  - "Local resources owner": choose demo from the list;

  - Leave the "Store documents locally" check-box checked (it is checked by default). Note: if "Store documents locally" is not checked, no documents are saved as DAV resources, and the DAV collection specified above is not used;

  - Check the "Store metadata" check-box;

  - Select the cartridges to use by ticking their check-boxes;

  - Note: when "Convert Link" is selected, all HREFs in the locally stored content are made relative.

Figure 16.91. Using Virtuoso Crawler

Figure 16.92. Using Virtuoso Crawler

-

-

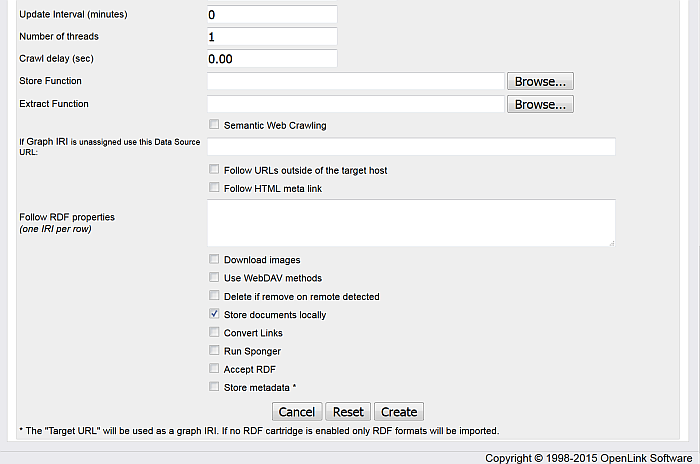

Click the button "Create":

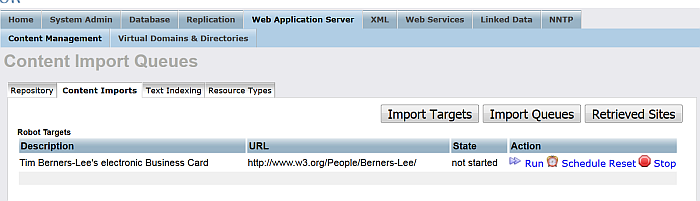

Figure 16.93. Using Virtuoso Crawler

-

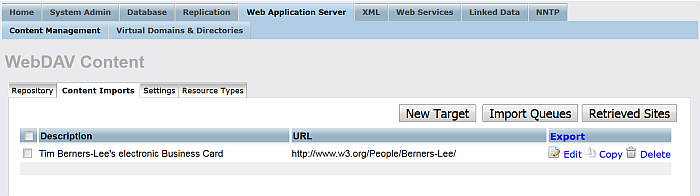

Click the button "Import Queues":

Figure 16.94. Using Virtuoso Crawler

- For the robot target labeled "Tim Berners-Lee's electronic Business Card", click "Run".

-

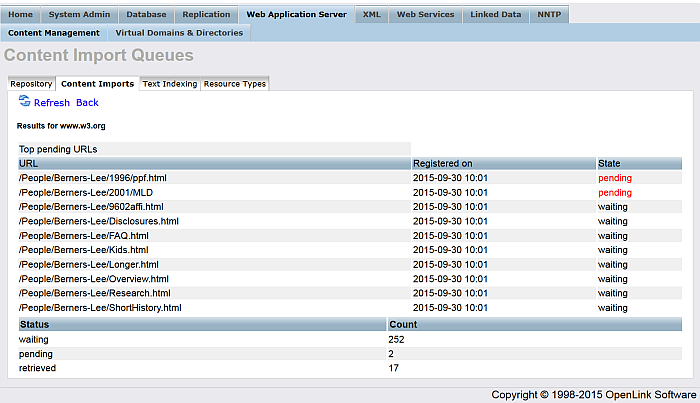

As result should be shown the number of the pages retrieved.
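To confirm what the crawl produced, something like the following could be run from ISQL. It is a sketch, not a documented procedure. The DAV path is the one entered in the form above. The second query assumes (unverified) that the graphs holding the RDF extracted with "Store metadata" have IRIs containing the crawled URL. It also scans every graph, so it may be slow on a large Quad Store.

```sql
-- List the documents the crawler stored under the DAV collection chosen in the form.
SELECT RES_FULL_PATH, RES_TYPE, RES_MOD_TIME
  FROM WS.WS.SYS_DAV_RES
 WHERE RES_FULL_PATH LIKE '/DAV/home/demo/my-crawling/%';

-- ASSUMPTION: graph IRIs contain the crawled URL; adjust the filter if your
-- Virtuoso version names the graphs differently.
SPARQL
SELECT ?g (COUNT (*) AS ?triples)
WHERE
  {
    GRAPH ?g { ?s ?p ?o }
    FILTER (CONTAINS (STR (?g), "w3.org/People/Berners-Lee"))
  }
GROUP BY ?g;
```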

Figure 16.95. Using Virtuoso Crawler

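Example: Use the scheduler to connect the Virtuoso Quad Store to PTSW (Ping the Semantic Web). The following procedure reads the list of RDF documents published by the PTSW export and loads each one into the Quad Store. The scheduled event then runs the procedure every 60 minutes: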

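    create procedure PTSW_CRAWL ()
    {
      declare xt, xp any;
      declare content, headers any;
      -- Fetch the PTSW export: an XML list of recently pinged RDF documents
      content := http_get ('http://pingthesemanticweb.com/export/', headers);
      xt := xtree_doc (content);
      -- Collect the url attribute of every rdfdocument element
      xp := xpath_eval ('//rdfdocument/@url', xt, 0);
      foreach (any x in xp) do
        {
          x := cast (x as varchar);
          -- Print the URL to the server console for debugging
          dbg_obj_print (x);
          {
            -- On any error, log the failing URL; the handler only exits this
            -- inner block, so the loop continues with the next URL
            declare exit handler for sqlstate '*' {
              log_message (sprintf ('PTSW crawler can not load : %s', x));
            };
            -- Load the document into a graph named after its own URL
            sparql load ?:x into graph ?:x;
            -- Re-key the Sponger cache record for this load to the plain URL
            -- and clear its expiration time
            update DB.DBA.SYS_HTTP_SPONGE set HS_LOCAL_IRI = x, HS_EXPIRATION = null
              where HS_LOCAL_IRI = 'destMD5=' || md5 (x) || '&graphMD5=' || md5 (x);
            commit work;
          }
        }
    }
    ;

    -- Register the procedure with the scheduler; SE_INTERVAL is in minutes
    insert soft SYS_SCHEDULED_EVENT (SE_SQL, SE_START, SE_INTERVAL, SE_NAME)
      values ('DB.DBA.PTSW_CRAWL ()', cast (stringtime ('0:0') as DATETIME), 60, 'PTSW Crawling');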
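After the procedure and the event are created, a sketch along these lines could be used to run the crawl once by hand, inspect the scheduled event, and remove it when it is no longer wanted. It assumes the event name 'PTSW Crawling' from the example above. SE_LAST_COMPLETED and SE_LAST_ERROR are the scheduler's bookkeeping columns for the most recent run.

```sql
-- Run the crawl once manually before relying on the scheduler.
DB.DBA.PTSW_CRAWL ();

-- Inspect the event: when it started, how often it runs (minutes),
-- and how the last run ended.
SELECT SE_NAME, SE_START, SE_INTERVAL, SE_LAST_COMPLETED, SE_LAST_ERROR
  FROM DB.DBA.SYS_SCHEDULED_EVENT
 WHERE SE_NAME = 'PTSW Crawling';

-- Stop the periodic crawl by removing the event.
DELETE FROM DB.DBA.SYS_SCHEDULED_EVENT WHERE SE_NAME = 'PTSW Crawling';
```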
