16.10.9. Sponger Programmers Guide

The Sponger forms part of the extensible RDF framework built into Virtuoso Universal Server. A key component of the Sponger's pluggable architecture is its support for Sponger Cartridges, which themselves are comprised of an Entity Extractor and an Ontology Mapper. Virtuoso bundles numerous pre-written cartridges for RDF data extraction from a wide range of data sources. However, developers are free to develop their own custom cartridges. This programmer's guide describes how.

The guide is a companion to the Virtuoso Sponger whitepaper. The latter describes the Sponger in depth, its architecture, configuration, use and integration with other Virtuoso facilities such as the Open Data Services (ODS) application framework. This guide focuses solely on custom cartridge development.

Configuration of CURIEs used by the Sponger

To configure the CURIEs used by the Sponger and exposed through Sponger clients such as "description.vsp" (the VSP-based information resource description utility), use the xml_set_ns_decl function.

Here is an example that adds a CURIE pattern:

-- Example link: http://linkeddata.uriburner.com/about/rdf/http://twitter.com/guykawasaki/status/1144945513#this

XML_SET_NS_DECL ('uriburner', 'http://linkeddata.uriburner.com/about/rdf/http://', 2);

Cartridge Architecture

The Sponger is comprised of cartridges which are themselves comprised of an entity extractor and an ontology mapper. Entities extracted from non-RDF resources are used as the basis for generating structured data by mapping them to a suitable ontology. A cartridge is invoked through its cartridge hook, a Virtuoso/PL procedure entry point and binding to the cartridge's entity extractor and ontology mapper.

Entity Extractor

When an RDF aware client requests data from a network accessible resource via the Sponger the following events occur:

- A request is made for data in RDF form (explicitly via HTTP Accept headers). If RDF is returned, nothing further happens.
- If RDF isn't returned, the Sponger passes the data through an Entity Extraction Pipeline (using Entity Extractors).
- The extracted data is transformed into RDF via a Mapping Pipeline. RDF instance data is generated by way of ontology matching and mapping.
- RDF instance data (a.k.a. RDF Structured Linked Data) is returned to the client.

Extraction Pipeline

Depending on the file or format type detected at ingest, the Sponger applies the appropriate entity extractor. Detection occurs at the time of content negotiation instigated by the retrieval user agent. The normal extraction pipeline processing is as follows:

-

The Sponger tries to get RDF data (including N3 or Turtle) directly from the dereferenced URL. If it finds some, it returns it; otherwise, it continues.

-

If the URL refers to an HTML file, the Sponger tries to find "link" elements referring to RDF documents. If it finds one or more of them, it adds their triples into a temporary RDF graph and continues its processing.

-

The Sponger then scans for microformats or GRDDL. If either is found, RDF triples are generated and added to a temporary RDF graph before continuing.

-

If the Sponger finds eRDF or RDFa data in the HTML file, it extracts it from the HTML file and inserts it into the RDF graph before continuing.

-

If the Sponger finds it is talking with a web service such as Google Base, it maps the API of the web service to an ontology, creates triples from that mapping and adds the triples to the temporary RDF graph.

-

The next fallback is scanning of the HTML header for different Web 2.0 types or RSS 1.1, RSS 2.0, Atom, etc.

-

Failing those tests, the scan then uses standard Web 1.0 rules to search the header tags for metadata (typically Dublin Core), transforms it to RDF and again adds it to the temporary graph. Other HTTP response header data may also be transformed to RDF.

-

If nothing has been retrieved at this point, the ODS-Briefcase metadata extractor is tried.

-

Finally, if nothing is found, the Sponger will return an empty graph.

Ontology Mapper

Sponger ontology mappers perform the task of generating RDF instance data from extracted entities (non-RDF) using ontologies associated with a given data source type. They are typically XSLT (using GRDDL or an in-built Virtuoso mapping scheme) or Virtuoso/PL based. Virtuoso comes preconfigured with a large range of ontology mappers contained in one or more Sponger cartridges.

Cartridge Registry

To be recognized by the SPARQL engine, a Sponger cartridge must be registered in the Cartridge Registry by adding a record to the table DB.DBA.SYS_RDF_MAPPERS, either manually via DML, or more easily through Conductor, Virtuoso's browser-based administration console, which provides a UI for adding your own cartridges. (Sponger configuration using Conductor is described in detail later.) The SYS_RDF_MAPPERS table definition is as follows:

create table "DB"."DBA"."SYS_RDF_MAPPERS"

(

"RM_ID" INTEGER IDENTITY, -- cartridge ID. Determines the order of the cartridge's invocation in the Sponger processing chain

"RM_PATTERN" VARCHAR, -- a REGEX pattern to match the resource URL or MIME type

"RM_TYPE" VARCHAR, -- which property of the current resource to match: "MIME" or "URL"

"RM_HOOK" VARCHAR, -- fully qualified Virtuoso/PL function name

"RM_KEY" LONG VARCHAR, -- API specific key to use

"RM_DESCRIPTION" LONG VARCHAR, -- cartridge description (free text)

"RM_ENABLED" INTEGER, -- a 0 or 1 integer flag to exclude or include the cartridge from the Sponger processing chain

"RM_OPTIONS" ANY, -- cartridge specific options

"RM_PID" INTEGER IDENTITY,

PRIMARY KEY ("RM_PATTERN", "RM_TYPE")

);
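As a quick check of what the registry currently holds, a query along the following lines lists the enabled cartridges in the order the Sponger would try them. This is a minimal sketch that relies only on the column names shown in the table definition above.

```sql
-- List enabled cartridges in invocation order (lowest RM_ID first).
SELECT RM_ID, RM_TYPE, RM_PATTERN, RM_HOOK, RM_DESCRIPTION
  FROM DB.DBA.SYS_RDF_MAPPERS
 WHERE RM_ENABLED = 1
 ORDER BY RM_ID;
```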

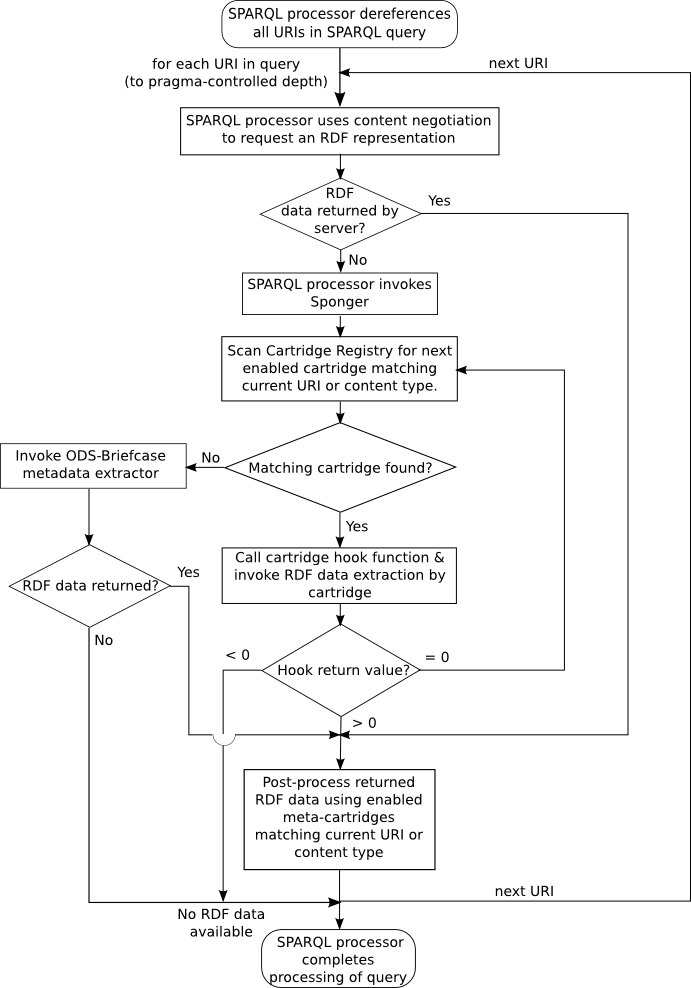

Cartridge Invocation

The Virtuoso SPARQL processor supports IRI dereferencing via the Sponger. If a SPARQL query references non-default graph URIs, the Sponger goes out (via HTTP) to fetch the network resources identified by those data source URIs and inserts the extracted RDF data into the local RDF quad store. The Sponger invokes the appropriate cartridge for the data source type to produce RDF instance data. If none of the registered cartridges are capable of handling the received content type, the Sponger will attempt to obtain RDF instance data via the in-built WebDAV metadata extractor.

Sponger cartridges are invoked as follows:

When the SPARQL processor dereferences a URI, it plays the role of an HTTP user agent (client) that makes a content type specific request to an HTTP server via the HTTP request's Accept headers. The following then occurs:

- If the content type returned is RDF then no further transformation is needed and the process stops. For instance, when consuming an (X)HTML document with a GRDDL profile, the profile URI points to a data provider that simply returns RDF instance data.
- If the content type is not RDF (i.e. not application/rdf+xml or text/rdf+n3), for instance 'text/plain', the Sponger looks in the Cartridge Registry, iterating over every record for which the RM_ENABLED flag is true, with the look-up sequence ordered on the RM_ID column values. For each record, the processor tries matching the content type or URL against the RM_PATTERN value and, if there is a match, the function specified in the RM_HOOK column is called. If the function doesn't exist, or signals an error, the SPARQL processor looks at the next record.
  - If the hook returns zero, the next cartridge is tried. (A cartridge function can return zero if it believes a subsequent cartridge in the chain is capable of extracting more RDF data.)
  - If the result returned by the hook is negative, the Sponger is instructed that no RDF was generated and the process stops.
  - If the hook result is positive, the Sponger is informed that structured data was retrieved and the process stops.
- If none of the cartridges match the source data signature (content type or URL), the ODS-Briefcase WebDAV metadata extractor and RDF generator is called.
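The registry look-up described above can be approximated by the sketch below. DB.DBA.DEMO_FIND_CARTRIDGE_HOOK is a hypothetical helper written purely for illustration and is not part of Virtuoso. It only reports which hook would be tried first for a given URL and content type; it does not call the hook or act on return values, and the Sponger's real matching logic may differ in detail.

```sql
-- Hypothetical helper: returns the name of the first enabled cartridge hook
-- whose RM_PATTERN matches the URL (RM_TYPE = 'URL') or the content type
-- (RM_TYPE = 'MIME'), scanning the registry in RM_ID order.
create procedure DB.DBA.DEMO_FIND_CARTRIDGE_HOOK (in url varchar, in mime varchar)
{
  declare hook varchar;
  hook := null;
  for select RM_HOOK, RM_PATTERN, RM_TYPE
        from DB.DBA.SYS_RDF_MAPPERS
       where RM_ENABLED = 1
       order by RM_ID do
    {
      -- Keep only the first match; later rows are ignored once a hook is found.
      if (hook is null
          and ((RM_TYPE = 'URL' and regexp_match (RM_PATTERN, url) is not null)
            or (RM_TYPE = 'MIME' and regexp_match (RM_PATTERN, mime) is not null)))
        hook := RM_HOOK;
    }
  return hook;
}
;

select DB.DBA.DEMO_FIND_CARTRIDGE_HOOK (
  'http://musicbrainz.org/artist/4d5447d7-c61c-4120-ba1b-d7f471d385b9.html',
  'text/html');
```

A helper of this kind can be handy when debugging why a newly registered cartridge is never reached: an earlier row with a broader pattern may be claiming the request first.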

Meta-Cartridges

The above describes the RDF generation process for 'primary' Sponger cartridges. Virtuoso also supports another cartridge type - a 'meta-cartridge'. Meta-cartridges act as post-processors in the cartridge pipeline, augmenting entity descriptions in an RDF graph with additional information gleaned from 'lookup' data sources and web services. Meta-cartridges are described in more detail in a later section.

Figure 16.104. Meta-Cartridges

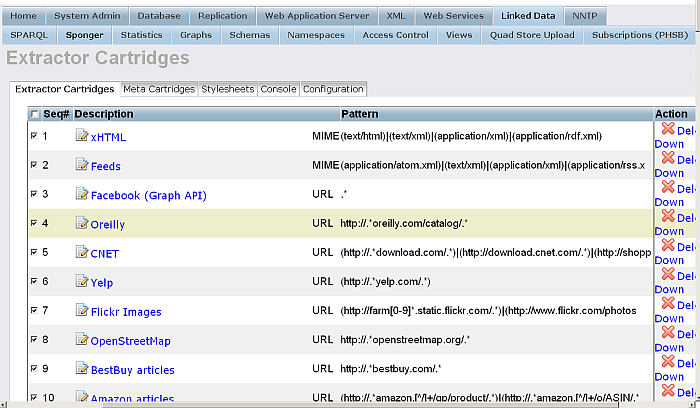

Cartridges Bundled with Virtuoso

Cartridges VAD

Virtuoso supplies a number of prewritten cartridges for extracting RDF data from a variety of popular Web resources and file types. The cartridges are bundled as part of the cartridges_dav VAD (Virtuoso Application Distribution).

To see which cartridges are available, look at the 'Linked Data' screen in Conductor. This can be reached through the Linked Data -> Sponger -> Extractor Cartridges and Meta Cartridges menu items.

Figure 16.105. RDF Cartridges

To check which version of the cartridges VAD is installed, or to upgrade it, refer to Conductor's 'VAD Packages' screen, reachable through the 'System Admin' > 'Packages' menu items.

The latest VADs for the closed source releases of Virtuoso can be downloaded from the downloads area on the OpenLink website. Select either the 'DBMS (WebDAV) Hosted' or 'File System Hosted' product format from the 'Distributed Collaborative Applications' section, depending on whether you want the Virtuoso application to use WebDAV or native filesystem storage. VADs for Virtuoso Open Source edition (VOS) are available for download from the VOS Wiki.

Example Source Code

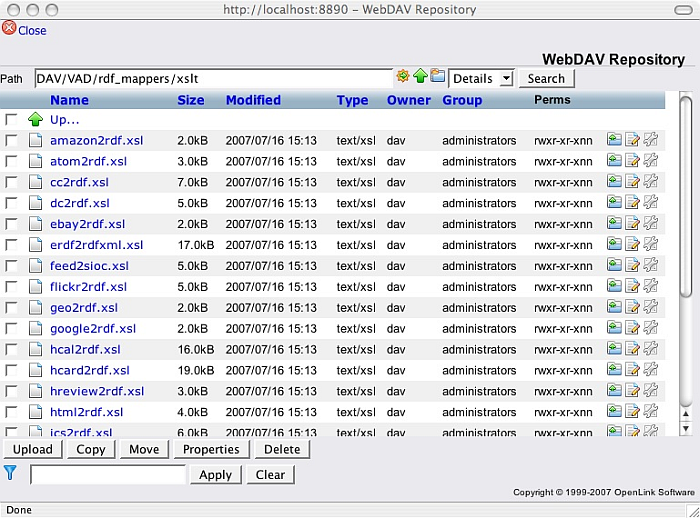

For developers wanting example cartridge code, the most authoritative reference is the cartridges VAD source code itself. This is included as part of the VOS distribution. After downloading and unpacking the sources, the script used to create the cartridges and the associated stylesheets can be found in:

-

<vos root>/binsrc/rdf_mappers/rdf_mappers.sql

-

<vos root>/binsrc/rdf_mappers/xslt/*.xsl

Alternatively, you can look at the actual cartridge implementations installed in your Virtuoso instance by inspecting the cartridge hook function used by a particular cartridge. This is easily identified from the 'Cartridge name' field of Conductor's 'RDF Cartridges' screen, after selecting the cartridge of interest. The hook function code can be viewed from the 'Schema Objects' screen under the 'Database' menu, by locating the function in the 'DB' > 'Procedures' folder. Stylesheets used by the cartridges are installed in the WebDAV folder DAV/VAD/cartridges/xslt. This can be explored using Conductor's WebDAV interface. The actual rdf_mappers.sql file installed with your system can also be found in the DAV/VAD/cartridges folder.

Custom Cartridge

Virtuoso comes well supplied with a variety of Sponger cartridges and GRDDL filters. When, then, is it necessary to write your own cartridge?

In the main, writing a new cartridge should only be necessary to generate RDF from a REST-style Web service not supported by an existing cartridge, or to customize the output from an existing cartridge to your own requirements. Apart from these circumstances, the existing Sponger infrastructure should meet most of your needs. This is particularly the case for document resources.

Document Resources

We use the term document resource to identify content which is not being returned from a Web service. Normally it can broadly be conceived as some form of document, be it a text based entity or some form of file, for instance an image file.

In these cases, the document either contains RDF, which can be extracted directly, or it holds metadata in a supported format which can be transformed to RDF using an existing filter.

The following cases should all be covered by the existing Sponger cartridges:

-

embedded or linked RDF

-

RDFa, eRDF and other popular microformats extractable directly or via GRDDL

-

popular syndication formats (RSS 2.0, Atom, OPML, OCS, XBEL)

GRDDL

GRDDL (Gleaning Resource Descriptions from Dialects of Languages) is a mechanism for deriving RDF data from XML documents and in particular XHTML pages. Document authors may associate transformation algorithms, typically expressed in XSLT, with their documents to transform embedded metadata into RDF.

The cartridges VAD installs a number of GRDDL filters for transforming popular microformats (such as RDFa, eRDF or hCalendar) into RDF. The available filters can be viewed, or configured, in Conductor's 'GRDDL Filters for XHTML' screen. Navigate to the 'RDF Cartridges' screen using the 'RDF' > 'RDF Cartridges' menu items, then select the 'GRDDL Mappings' tab to display the 'GRDDL Filters for XHTML' screen. GRDDL filters are held in the WebDAV folder /DAV/VAD/rdf_cartridges/xslt/ alongside other XSLT templates. The Conductor interface allows you to add new GRDDL filters should you so wish.

For an introduction to GRDDL, try the GRDDL Primer. To underline GRDDL's utility, the primer includes an example of transforming Excel spreadsheet data, saved as XML, into RDF.

A comprehensive list of stylesheets for transforming HTML and non-HTML XML dialects is maintained on the ESW Wiki. The list covers a range of microformats, syndication formats and feedlists.

To see which Web Services are already catered for, view the list of cartridges in Conductor's 'RDF Cartridges' screen.

Creating Custom Cartridges

The Sponger is fully extensible by virtue of its pluggable cartridge architecture. New data formats can be fetched by creating new cartridges. While OpenLink is active in adding cartridges for new data sources, you are free to develop your own custom cartridges. Entity extractors can be built using Virtuoso PL, C/C++, Java or any other external language supported by Virtuoso's Server Extension API. Of course, Virtuoso's own entity extractors are written in Virtuoso PL.

The Anatomy of a Cartridge

Cartridge Hook Prototype

Every Virtuoso PL hook function used to plug a custom Sponger cartridge into the Virtuoso SPARQL engine must have a parameter list with the following parameters (the names of the parameters are not important, but their order and presence are):

- in graph_iri varchar: the IRI of the graph being retrieved/crawled
- in new_origin_uri varchar: the URL of the document being retrieved
- in dest varchar: the destination/target graph IRI
- inout content any: the content of the retrieved document
- inout async_queue any: if the PingService initialization parameter has been configured in the [SPARQL] section of the virtuoso.ini file, this is a pre-allocated asynchronous queue to be used to call the ping service
- inout ping_service any: the URL of a ping service, as assigned to the PingService parameter in the [SPARQL] section of the virtuoso.ini configuration file. PingTheSemanticWeb is an example of such a service.
- inout api_key any: a string value specific to a given cartridge, contained in the RM_KEY column of the DB.DBA.SYS_RDF_MAPPERS table. The value can be a single string or a serialized array of strings providing cartridge specific data.
- inout opts any: cartridge specific options held in a Virtuoso/PL vector which acts as an array of key-value pairs.
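Put together, a hook with the required parameter list might be declared as in the following skeleton. The procedure name DB.DBA.RDF_LOAD_MYSERVICE is hypothetical and the body is deliberately empty: it performs no extraction and simply declines, so it is a starting point under those assumptions rather than a working cartridge.

```sql
-- Hypothetical cartridge hook; the name RDF_LOAD_MYSERVICE is illustrative only.
create procedure DB.DBA.RDF_LOAD_MYSERVICE (
  in graph_iri varchar,      -- IRI of the graph being retrieved/crawled
  in new_origin_uri varchar, -- URL of the document being retrieved
  in dest varchar,           -- destination/target graph IRI
  inout content any,         -- content of the retrieved document
  inout async_queue any,     -- asynchronous queue for calling the ping service
  inout ping_service any,    -- URL of the ping service
  inout api_key any,         -- cartridge specific key
  inout opts any)            -- vector of key-value options
{
  -- No extraction is attempted in this skeleton.
  -- Returning 0 tells the Sponger to try the next cartridge in the chain.
  return 0;
}
;
```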

Return Value

If the hook procedure returns zero the next cartridge will be tried. If the result is negative the sponging process stops, instructing the SPARQL engine that nothing was retrieved. If the result is positive the process stops, this time instructing the SPARQL engine that RDF data was successfully retrieved.

If your cartridge should need to test whether other cartridges are configured to handle a particular data source, the following extract taken from the RDF_LOAD_CALAIS hook procedure illustrates how you might do this:

    if (xd is not null)
      {
        -- Sponging successful. Load network resource data being fetched in the Virtuoso Quad Store:
        DB.DBA.RM_RDF_LOAD_RDFXML (xd, new_origin_uri, coalesce (dest, graph_iri));
        flag := 1;
      }

    declare ord any;
    ord := (SELECT RM_ID FROM DB.DBA.SYS_RDF_MAPPERS WHERE RM_HOOK = 'DB.DBA.RDF_LOAD_CALAIS');

    for SELECT RM_PATTERN FROM DB.DBA.SYS_RDF_MAPPERS
        WHERE RM_ID > ord and RM_TYPE = 'URL' and RM_ENABLED = 1 ORDER BY RM_ID do
      {
        if (regexp_match (RM_PATTERN, new_origin_uri) is not null)
          -- try next candidate cartridge
          flag := 0;
      }

    return flag;

Specifying the Target Graph

Two cartridge hook function parameters contain graph IRIs, graph_iri and dest. graph_iri identifies an input graph being crawled. dest holds the IRI specified in any input:grab-destination pragma defined to control the SPARQL processor's IRI dereferencing. The pragma overrides the default behaviour and forces all retrieved triples to be stored in a single graph, irrespective of their graph of origin.

So, under some circumstances depending on how the Sponger has been invoked and whether it is being used to crawl an existing RDF graph, or derive RDF data from a non-RDF data source, dest may be null.

Consequently, when loading fetched RDF data into the quad store, cartridges typically specify the graph to receive the data using the coalesce function, which returns its first non-null argument. For example:

DB.DBA.RDF_LOAD_RDFXML (xd, new_origin_uri, coalesce (dest, graph_iri));

Here xd is an RDF/XML string holding the fetched RDF.

Specifying & Retrieving Cartridge Specific Options

The hook function prototype allows cartridge specific data to be passed to a cartridge through the opts parameter, which carries the value of the cartridge's RM_OPTIONS column: a Virtuoso/PL vector which acts as a heterogeneous array.

In the following example, two options are passed, 'add-html-meta' and 'get-feeds' with both values set to 'no'.

insert soft DB.DBA.SYS_RDF_MAPPERS (

RM_PATTERN, RM_TYPE, RM_HOOK, RM_KEY, RM_DESCRIPTION, RM_OPTIONS

)

values (

'(text/html)|(text/xml)|(application/xml)|(application/rdf.xml)',

'MIME', 'DB.DBA.RDF_LOAD_HTML_RESPONSE', null, 'xHTML',

vector ('add-html-meta', 'no', 'get-feeds', 'no')

);

The RM_OPTIONS vector can be handled as an array of key-value pairs using the get_keyword function. get_keyword performs a case sensitive search for the given keyword at every even index of the given array. It returns the element following the keyword, i.e. the keyword value.

Using get_keyword, any options passed to the cartridge can be retrieved using an approach similar to that below:

create procedure DB.DBA.RDF_LOAD_HTML_RESPONSE (

in graph_iri varchar, in new_origin_uri varchar, in dest varchar,

inout ret_body any, inout aq any, inout ps any, inout _key any,

inout opts any )

{

declare get_feeds, add_html_meta;

...

get_feeds := add_html_meta := 0;

if (isarray (opts) and 0 = mod (length(opts), 2))

{

if (get_keyword ('get-feeds', opts) = 'yes')

get_feeds := 1;

if (get_keyword ('add-html-meta', opts) = 'yes')

add_html_meta := 1;

}

...
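The behaviour of get_keyword can be tried in isolation from a SQL prompt. The statements below are a small sketch: the option names are the ones used in the example above, and the optional third argument shown in the second call supplies a default for a missing keyword. The final statement illustrates one way the stored options of an already registered cartridge might be changed.

```sql
-- Keyword present: returns the element that follows it, here 'yes'.
select get_keyword ('get-feeds', vector ('add-html-meta', 'no', 'get-feeds', 'yes'));

-- Keyword absent: the third argument is returned as the default, here 'no'.
select get_keyword ('get-feeds', vector ('add-html-meta', 'no'), 'no');

-- Change the options stored for a registered cartridge.
update DB.DBA.SYS_RDF_MAPPERS
   set RM_OPTIONS = vector ('add-html-meta', 'yes', 'get-feeds', 'no')
 where RM_HOOK = 'DB.DBA.RDF_LOAD_HTML_RESPONSE';
```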

XSLT - The Fulcrum

XSLT is the fulcrum of all OpenLink supplied cartridges. It provides the most convenient means of converting structured data extracted from web content by a cartridge's Entity Extractor into RDF.

Virtuoso's XML Infrastructure & Tools

Virtuoso's XML support and XSLT support are covered in detail in the on-line documentation. Virtuoso includes a highly capable XML parser and supports XPath, XQuery, XSLT and XML Schema validation.

Virtuoso supports extraction of XML documents from SQL datasets. A SQL long varchar, long xml or xmltype column in a database table can contain XML data as text or in a binary serialized format. A string representing a well-formed XML entity can be converted into an entity object representing the root node.

While Sponger cartridges will not normally concern themselves with handling XML extracted from SQL data, the ability to convert a string into an in-memory XML document is used extensively. The function xtree_doc(string) converts a string into such a document and returns a reference to the document's root. This document together with an appropriate stylesheet forms the input for the transformation of the extracted entities to RDF using XSLT. The input string to xtree_doc generally contains structured content derived from a web service.
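As a small illustration of the string-to-document step, the statement below parses a literal string with xtree_doc and reads one value back with xpath_eval. The XML fragment is made up for the example and does not reflect the format of any particular web service.

```sql
-- Parse a string into an in-memory XML tree, then extract the artist name from it.
select xpath_eval ('string (/artist/name)',
                   xtree_doc ('<artist id="a1"><name>John Lennon</name></artist>'));
```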

Virtuoso XSLT Support

Virtuoso implements XSLT 1.0 transformations as SQL callable functions. The xslt() Virtuoso/PL function applies a given stylesheet to a given source XML document and returns the transformed document. Virtuoso provides a way to extend the abilities of the XSLT processor by creating user defined XPath functions. The functions xpf_extension() and xpf_extension_remove() allow addition and removal of XPath extension functions.
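The following sketch shows xslt() applied to an in-memory document. It assumes that the xslt_sheet() function is available to define a stylesheet from a parsed document under a chosen name; the stylesheet URI, the input XML and the baseUri value are all invented for the example. Real cartridges load their stylesheets from the cartridges folder instead.

```sql
-- Define a tiny stylesheet under an illustrative name (the URI is only a key).
xslt_sheet ('http://example.org/demo/name2rdf.xsl', xtree_doc ('
<xsl:stylesheet version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:foaf="http://xmlns.com/foaf/0.1/">
  <xsl:param name="baseUri"/>
  <xsl:template match="/artist">
    <rdf:RDF>
      <rdf:Description rdf:about="{$baseUri}">
        <foaf:name><xsl:value-of select="name"/></foaf:name>
      </rdf:Description>
    </rdf:RDF>
  </xsl:template>
</xsl:stylesheet>'));

-- Apply it to a parsed document, passing one global parameter.
select xslt ('http://example.org/demo/name2rdf.xsl',
             xtree_doc ('<artist><name>John Lennon</name></artist>'),
             vector ('baseUri', 'http://example.org/artist/1#this'));
```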

General Cartridge Pipeline

The broad pipeline outlined here reflects the steps common to most cartridges:

-

Redirect from the requested URL to a Web service which returns XML

-

Stream the content into an in-memory XML document

-

Convert it to the required RDF/XML, expressed in the chosen ontology, using XSLT

-

Encode the RDF/XML as UTF-8

-

Load the RDF/XML into the quad store

The MusicBrainz cartridge typifies this approach. MusicBrainz is a community music metadatabase which captures information about artists, their recorded works, and the relationships between them. Artists always have a unique ID, so the URL http://musicbrainz.org/artist/4d5447d7-c61c-4120-ba1b-d7f471d385b9.html takes you directly to entries for John Lennon.

If you were to look at this page in your browser, you would see that the information about the artist contains no RDF data. However, the cartridge is configured to intercept requests to URLs of the form http://musicbrainz.org/([^/]*)/([^.]*) and redirect to the cartridge to fetch all the available information on the given artist, release, track or label.

The cartridge extracts entities by redirecting to the MusicBrainz XML Web Service using as the basis for the initial query the item ID, e.g. an artist or label ID, extracted from the original URL. Stripped to its essentials, the core of the cartridge is:

webservice_uri := sprintf ('http://musicbrainz.org/ws/1/%s/%s?type=xml&inc=%U',

kind, id, inc);

content := RDF_HTTP_URL_GET (webservice_uri, '', hdr, 'GET', 'Accept: */*');

xt := xtree_doc (content);

...

xd := DB.DBA.RDF_MAPPER_XSLT (registry_get ('_cartridges_path_') || 'xslt/mbz2rdf.xsl', xt);

...

xd := serialize_to_UTF8_xml (xd);

DB.DBA.RM_RDF_LOAD_RDFXML (xd, new_origin_uri, coalesce (dest, graph_iri));

In the above outline, RDF_HTTP_URL_GET sends a query to the MusicBrainz web service, using query parameters appropriate for the original request, and fetches the response.

The returned XML is parsed into an in-memory parse tree by xtree_doc. The Virtuoso/PL function RDF_MAPPER_XSLT is a simple wrapper around the function xslt which sets the current user to dba before returning an XML document transformed by an XSLT stylesheet, in this case mbz2rdf.xsl. The function serialize_to_UTF8_xml changes the character set of the in-memory XML document to UTF-8. Finally, RM_RDF_LOAD_RDFXML is a wrapper around RDF_LOAD_RDFXML which parses the content of an RDF/XML string into a sequence of RDF triples and loads them into the quad store. XSLT stylesheets are usually held in the DAV/VAD/cartridges/xslt folder of Virtuoso's WebDAV store. registry_get ('_cartridges_path_') returns the Cartridges VAD path, 'DAV/VAD/cartridges', from the Virtuoso registry.

Error Handling with Exit Handlers

Virtuoso condition handlers determine the behaviour of a Virtuoso/PL procedure when a condition occurs. You can declare one or more condition handlers in a Virtuoso/PL procedure for general SQL conditions or specific SQLSTATE values. If a statement in your procedure raises an SQLEXCEPTION condition and you declared a handler for the specific SQLSTATE or SQLEXCEPTION condition the server passes control to that handler. If a statement in your Virtuoso/PL procedure raises an SQLEXCEPTION condition, and you have not declared a handler for the specific SQLSTATE or the SQLEXCEPTION condition, the server passes the exception to the calling procedure (if any). If the procedure call is at the top-level, then the exception is signaled to the calling client.

A number of different condition handler types can be declared (see the Virtuoso reference documentation for more details.) Of these, exit handlers are probably all you will need. An example is shown below which handles any SQLSTATE. Commented out is a debug statement which outputs the message describing the SQLSTATE.

create procedure DB.DBA.RDF_LOAD_SOCIALGRAPH (in graph_iri varchar, ...)

{

declare qr, path, hdr any;

...

declare exit handler for sqlstate '*'

{

-- dbg_printf ('%s', __SQL_MESSAGE);

return 0;

};

...

-- data extraction and mapping successful

return 1;

}

Exit handlers are used extensively in the Virtuoso supplied cartridges. They are useful for ensuring graceful failure when trying to convert content which may not conform to your expectations. The RDF_LOAD_FEED_SIOC procedure (which is used internally by several cartridges) shown below uses this approach:

-- /* convert the feed in rss 1.0 format to sioc */

create procedure DB.DBA.RDF_LOAD_FEED_SIOC (in content any, in iri varchar, in graph_iri varchar, in is_disc int := '')

{

declare xt, xd any;

declare exit handler for sqlstate '*'

{

goto no_sioc;

};

xt := xtree_doc (content);

xd := DB.DBA.RDF_MAPPER_XSLT (

registry_get ('_cartridges_path_') || 'xslt/feed2sioc.xsl', xt,

vector ('base', graph_iri, 'isDiscussion', is_disc));

xd := serialize_to_UTF8_xml (xd);

DB.DBA.RM_RDF_LOAD_RDFXML (xd, iri, graph_iri);

return 1;

no_sioc:

return 0;

}

Loading RDF into the Quad Store

RDF_LOAD_RDFXML & TTLP

The two main Virtuoso/PL functions used by the cartridges for loading RDF data into the Virtuoso quad store are DB.DBA.TTLP and DB.DBA.RDF_LOAD_RDFXML. Multithreaded versions of these functions, DB.DBA.TTLP_MT and DB.DBA.RDF_LOAD_RDFXML_MT, are also available.

RDF_LOAD_RDFXML parses the content of an RDF/XML string as a sequence of RDF triples and loads them into the quad store. TTLP parses TTL (Turtle or N3) and places its triples into quad storage. Ordinarily, cartridges use RDF_LOAD_RDFXML. However, there may be occasions where you want to insert statements written as TTL, rather than RDF/XML, in which case you should use TTLP.
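The two loaders can be compared side by side with a pair of calls such as those below. This is a sketch only: the graph IRI and the sample data are invented, the base IRI is left empty because the data uses absolute IRIs, and the TTLP flags argument is set to 0 on the assumption that default parsing behaviour is wanted.

```sql
-- Load a Turtle description: text, base IRI, target graph, parser flags.
DB.DBA.TTLP ('
@prefix foaf: <http://xmlns.com/foaf/0.1/> .
<http://example.org/people#alice> a foaf:Person ; foaf:name "Alice" .
', '', 'http://example.org/demo-graph', 0);

-- Load the same description as RDF/XML: text, base IRI, target graph.
DB.DBA.RDF_LOAD_RDFXML ('
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:foaf="http://xmlns.com/foaf/0.1/">
  <foaf:Person rdf:about="http://example.org/people#alice">
    <foaf:name>Alice</foaf:name>
  </foaf:Person>
</rdf:RDF>', '', 'http://example.org/demo-graph');

-- Inspect what ended up in the graph.
SPARQL SELECT ?s ?p ?o FROM <http://example.org/demo-graph> WHERE { ?s ?p ?o };
```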


Attribution

Many of the OpenLink supplied cartridges actually use RM_RDF_LOAD_RDFXML to load data into the quad store. This is a thin wrapper around RDF_LOAD_RDFXML which includes in the generated graph an indication of the external ontologies being used. The attribution takes the form:

    <ontologyURI> a opl:DataSource .
    <spongedResourceURI> rdfs:isDefinedBy <ontologyURI> .
    <ontologyURI> opl:hasNamespacePrefix "<ontologyPrefix>" .

where prefix opl: denotes the ontology http://www.openlinksw.com/schema/attribution#.

Deleting Existing Graphs

Before loading fetched RDF data into a graph, you may want to delete any existing graph with the same URI. To do so, select the 'RDF' > 'List of Graphs' menu commands in Conductor, then use the 'Delete' command for the appropriate graph. Alternatively, you can use one of the following SQL commands:

    SPARQL CLEAR GRAPH <graph_iri>
    -- or
    DELETE FROM DB.DBA.RDF_QUAD WHERE G = DB.DBA.RDF_MAKE_IID_OF_QNAME (graph_iri)

Proxy Service Data Expiration

When the Proxy Service is invoked by a user agent, the Sponger records the expiry date of the imported data in the table DB.DBA.SYS_HTTP_SPONGE. The data invalidation rules conform to those of traditional HTTP clients (Web browsers). The data expiration time is determined based on subsequent data fetches of the same resource. The first data retrieval records the 'expires' header. On subsequent fetches, the current time is compared to the expiration time stored in the local cache. If HTTP 'expires' header data isn't returned by the source data server, the Sponger will derive its own expiration time by evaluating the 'date' header and 'last-modified' HTTP headers.

Ontology Mapping

After extracting entities from a web resource and converting them to an in-memory XML document, the entities must be transformed to the target ontology using XSLT and an appropriate stylesheet. A typical call sequence would be:

xt := xtree_doc (content);

...

xd := DB.DBA.RDF_MAPPER_XSLT (registry_get ('_cartridges_path_') || 'xslt/mbz2rdf.xsl', xt);

Because of the wide variation in the data mapped by cartridges, it is not possible to present a typical XSL stylesheet outline. The Examples section presented later includes detailed extracts from the MusicBrainz cartridge's stylesheet which provide a good example of how to map to an ontology. Rather than attempting to be an XSLT tutorial, the material which follows offers some general guidelines.

Passing Parameters to the XSLT Processor

Virtuoso's XSLT processor will accept default values for global parameters from the optional third argument of the xslt() function. This argument, if specified, must be a vector of parameter names and values of the form vector(name1, value1,... nameN, valueN), where name1 ... nameN must be of type varchar, and value1 ... valueN may be of any Virtuoso datatype, but may not be null.

This extract from the Crunchbase cartridge shows how parameters may be passed to the XSLT processor. The function RDF_MAPPER_XSLT (in xslt varchar, inout xt any, in params any := null) passes the parameters vector directly to xslt().

xt := DB.DBA.RDF_MAPPER_XSLT (

registry_get ('_cartridges_path_') || 'xslt/crunchbase2rdf.xsl', xt,

vector ('baseUri', coalesce (dest, graph_iri), 'base', base, 'suffix', suffix)

);

The corresponding stylesheet crunchbase2rdf.xsl retrieves the parameters baseUri, base and suffix as follows:

    ...
    <xsl:output method="xml" indent="yes" />
    <xsl:variable name="ns">http://www.crunchbase.com/</xsl:variable>
    <xsl:param name="baseUri" />
    <xsl:param name="base"/>
    <xsl:param name="suffix"/>
    <xsl:template name="space-name">
    ...

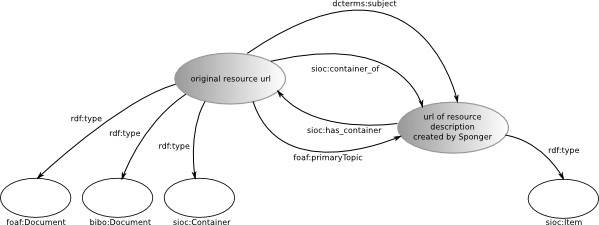

An RDF Description Template

Defining A Generic Resource Description Wrapper

Many of the OpenLink cartridges create a resource description that conforms to a common "wrapper" template, which describes the relationship between the (usually) non-RDF source resource being fetched and the RDF description generated by the Sponger. The wrapper is appropriate for resources which can broadly be conceived as documents. It provides a generic minimal description of the source document, but also links to the much more detailed description provided by the Sponger. So, instead of just emitting a resource description, the Sponger factors the container into the generated graph constituting the RDF description.

The template is depicted below:

Figure 16.106. Template

To generate an RDF description corresponding to the wrapper template, a stylesheet containing the following block of instructions is used. This extract is taken from the eBay cartridge's stylesheet, ebay2rdf.xsl. Many of the OpenLink cartridges follow a similar pattern.

<xsl:param name="baseUri"/>

...

<xsl:variable name="resourceURL">

<xsl:value-of select="$baseUri"/>

</xsl:variable>

...

    <xsl:template match="/">
      <rdf:RDF>
        <rdf:Description rdf:about="{$resourceURL}">
          <rdf:type rdf:resource="&foaf;Document"/>
          <rdf:type rdf:resource="&bibo;Document"/>
          <rdf:type rdf:resource="&sioc;Container"/>
          <sioc:container_of rdf:resource="{vi:proxyIRI ($resourceURL)}"/>
          <foaf:primaryTopic rdf:resource="{vi:proxyIRI ($resourceURL)}"/>
          <dcterms:subject rdf:resource="{vi:proxyIRI ($resourceURL)}"/>
        </rdf:Description>
        <rdf:Description rdf:about="{vi:proxyIRI ($resourceURL)}">
          <rdf:type rdf:resource="&sioc;Item"/>
          <sioc:has_container rdf:resource="{$resourceURL}"/>
          <xsl:apply-templates/>
        </rdf:Description>
      </rdf:RDF>
    </xsl:template>

...

Using SIOC as a Generic Container Model

The generic resource description wrapper just described uses SIOC to establish the container/contained relationship between the source resource and the generated graph. Although the most important classes for the generic wrapper are obviously Container and Item, SIOC provides a generic data model of containers, items, item types, and associations between items which can be combined with other vocabularies such as FOAF and Dublin Core.

SIOC defines a number of other classes, such as User, UserGroup, Role, Site, Forum and Post. A separate SIOC types module (T-SIOC) extends the SIOC Core ontology by defining subclasses and subproperties of SIOC terms. Subclasses include: AddressBook, BookmarkFolder, Briefcase, EventCalendar, ImageGallery, Wiki, Weblog and BlogPost, plus many others.

OpenLink Data Spaces (ODS) uses SIOC extensively as a data space "glue" ontology to describe the base data and containment hierarchy of all the items managed by ODS applications (Data Spaces). For example, ODS-Weblog is an application of type sioc:Forum. Each ODS-Weblog application instance contains blogs of type sioct:Weblog. Each blog is a sioc:container_of posts of type sioc:Post.

Generally, when deciding how to describe resources handled by your own custom cartridge, SIOC provides a useful framework for the description which complements the SIOC-based container model adopted throughout the ODS framework.

Naming Conventions for Sponger Generated Descriptions

As can be seen from the stylesheet extract just shown, the URI of the resource description generated by the Sponger to describe the network resource being fetched is given by the function {vi:proxyIRI ($resourceURL)}, where resourceURL is the URL of the original network resource being fetched. proxyIRI is an XPath extension function defined in rdf_mappers.sql as

xpf_extension ('http://www.openlinksw.com/virtuoso/xslt/:proxyIRI', 'DB.DBA.RDF_SPONGE_PROXY_IRI');

which maps to the Virtuoso/PL procedure DB.DBA.RDF_SPONGE_PROXY_IRI. This procedure in turn generates a resource description URI which typically takes the form: http://<hostName:port>/about/html/http/<resourceURL>#this
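The naming pattern above can be sketched outside Virtuoso. The following Python fragment is only an illustration of the URI shape quoted in the text: the helper name proxy_iri, the host localhost:8890 and the sample resource URL are assumptions, as is the way the scheme is folded into the path. The URI actually produced depends on DB.DBA.RDF_SPONGE_PROXY_IRI and on the server configuration.

```python
from urllib.parse import urlsplit


def proxy_iri(host: str, resource_url: str, output: str = "html") -> str:
    """Hypothetical helper: build a Sponger-style description URI.

    Assumes the form http://<host>/about/<output>/<scheme>/<rest>#this,
    i.e. the "://" of the source URL collapses into a path separator.
    """
    scheme = urlsplit(resource_url).scheme
    # Keep everything after "<scheme>://" verbatim.
    rest = resource_url[len(scheme) + 3:]
    return f"http://{host}/about/{output}/{scheme}/{rest}#this"


print(proxy_iri("localhost:8890", "http://www.example.com/item/42"))
```

The trailing #this fragment is what distinguishes the described entity from the document that describes it.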

Registering & Configuring Cartridges

Once you have developed a cartridge, you must register it in the Cartridge Registry so that the SPARQL processor recognizes and uses it. You should first compile your cartridge hook function by issuing a "create procedure DB.DBA.RDF_LOAD_xxx ..." command through one of Virtuoso's SQL interfaces. You can then create the required Cartridge Registry entry either by adding a row to the SYS_RDF_MAPPERS table directly using SQL, or by using the Conductor UI.

Using SQL

If you choose to register your cartridge using SQL, possibly as part of a Virtuoso/PL script, the required SQL will typically mirror one of the following INSERT commands.

Below, a cartridge for OpenCalais is being installed which will be tried when the MIME type of the network resource data being fetched is one of text/plain, text/xml or text/html. (The definition of the SYS_RDF_MAPPERS table was introduced earlier in section 'Cartridge Registry'.)

insert soft DB.DBA.SYS_RDF_MAPPERS ( RM_PATTERN, RM_TYPE, RM_HOOK, RM_KEY, RM_DESCRIPTION, RM_ENABLED) values ( '(text/plain)|(text/xml)|(text/html)', 'MIME', 'DB.DBA.RDF_LOAD_CALAIS', null, 'Opencalais', 1);

As an alternative to matching on the content's MIME type, candidate cartridges to be tried in the conversion pipeline can be identified by matching the data source URL against a URL pattern stored in the cartridge's entry in the Cartridge Registry.

insert soft DB.DBA.SYS_RDF_MAPPERS ( RM_PATTERN, RM_TYPE, RM_HOOK, RM_KEY, RM_DESCRIPTION, RM_OPTIONS) values ( '(http://api.crunchbase.com/v/1/.*)|(http://www.crunchbase.com/.*)', 'URL', 'DB.DBA.RDF_LOAD_CRUNCHBASE', null, 'CrunchBase', null);
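To see how the two kinds of registry entry select candidate cartridges, the matching can be modelled in a few lines of Python. This is a sketch only: the patterns and hook names are copied from the two INSERT statements above, while the function candidate_hooks, the sample URLs and the use of a prefix-anchored regular expression match are assumptions about behaviour, not a description of Virtuoso's internals.

```python
import re

# (RM_TYPE, RM_PATTERN, RM_HOOK) taken from the INSERT statements above.
REGISTRY = [
    ("MIME", r"(text/plain)|(text/xml)|(text/html)", "DB.DBA.RDF_LOAD_CALAIS"),
    ("URL",
     r"(http://api.crunchbase.com/v/1/.*)|(http://www.crunchbase.com/.*)",
     "DB.DBA.RDF_LOAD_CRUNCHBASE"),
]


def candidate_hooks(url: str, mime: str) -> list:
    """Return the hooks whose pattern matches the URL or the MIME type."""
    hooks = []
    for rm_type, pattern, hook in REGISTRY:
        # RM_TYPE decides which property of the resource is tested.
        subject = mime if rm_type == "MIME" else url
        if re.match(pattern, subject):
            hooks.append(hook)
    return hooks


print(candidate_hooks("http://www.crunchbase.com/company/example", "text/html"))
print(candidate_hooks("http://example.org/logo.png", "image/png"))
```

A resource can therefore qualify for several cartridges at once; the order in which they are tried is governed by RM_ID.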

The value of RM_ID to set depends on where in the cartridge invocation order you want to position a particular cartridge. RM_ID should be set lower than 10028 to ensure the cartridge is tried before the ODS-Briefcase (WebDAV) metadata extractor, which is always the last mapper to be tried if no preceding cartridge has been successful.

UPDATE DB.DBA.SYS_RDF_MAPPERS SET RM_ID = 1000 WHERE RM_HOOK = 'DB.DBA.RDF_LOAD_BIN_DOCUMENT';

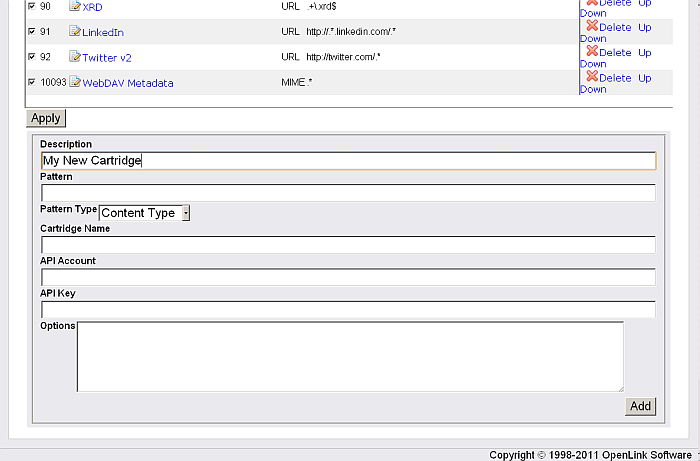

Using Conductor

Cartridges can be added manually using the 'Add' panel of the 'RDF Cartridges' screen.

Figure 16.107. RDF Cartridges

Installing Stylesheets

Although you could place your cartridge stylesheet in any folder configured to be accessible by Virtuoso, the simplest option is to upload it to the DAV/VAD/cartridges/xslt folder using the WebDAV browser accessible from the Conductor UI.

Figure 16.108. WebDAV browser

Should you wish to locate your stylesheets elsewhere, ensure that the DirsAllowed setting in the virtuoso.ini file is configured appropriately.

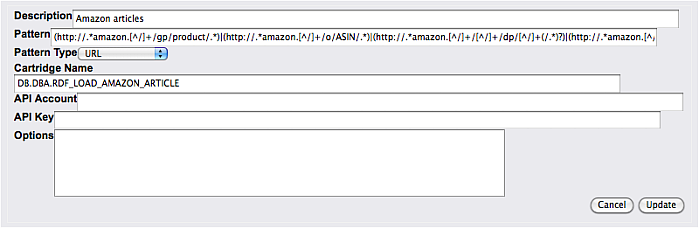

Setting API Key

Some cartridges require an API account and/or API key to be provided for accessing the required service. This can be done from the Linked Data -> Sponger tab of the Conductor by selecting the cartridge from the list provided, entering the API Account and API Key in the dialog at the bottom of the page, and clicking Update to save, as indicated in the screenshot below:

Figure 16.109. Registering API Key

For example, developers must register with Flickr to obtain a key for its service. See http://developer.yahoo.com/flickr/. To cater for services which require an application key, the Cartridge Registry SYS_RDF_MAPPERS table includes an RM_KEY column to store any key required for a particular service. This value is passed to the service's cartridge through the _key parameter of the cartridge hook function.

Alternatively a cartridge can store a key value in the virtuoso.ini configuration file and retrieve it in the hook function.

Flickr Cartridge

This example shows an extract from the Flickr cartridge hook function DB.DBA.RDF_LOAD_FLICKR_IMG and the use of an API key. Also, commented out, is a call to cfg_item_value() which illustrates how the API key could instead be stored and retrieved from the SPARQL section of the virtuoso.ini file.

create procedure DB.DBA.RDF_LOAD_FLICKR_IMG (

in graph_iri varchar, in new_origin_uri varchar, in dest varchar,

inout _ret_body any, inout aq any, inout ps any, inout _key any,

inout opts any )

{

declare xd, xt, url, tmp, api_key, img_id, hdr, exif any;

declare exit handler for sqlstate '*'

{

return 0;

};

tmp := sprintf_inverse (new_origin_uri,

'http://farm%s.static.flickr.com/%s/%s_%s.%s', 0);

img_id := tmp[2];

api_key := _key;

--cfg_item_value (virtuoso_ini_path (), 'SPARQL', 'FlickrAPIkey');

if (tmp is null or length (tmp) <> 5 or not isstring (api_key))

return 0;

url := sprintf('http://api.flickr.com/services/rest/?method=flickr.photos.getInfo&photo_id=%s&api_key=%s',img_id, api_key);

tmp := http_get (url, hdr);
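The URL-parsing step of the extract above can be illustrated in Python. The sketch below stands in for the sprintf_inverse call with a regular expression that is only an approximation of the format 'http://farm%s.static.flickr.com/%s/%s_%s.%s'. The function name parse_flickr_image_url and the sample image URL are hypothetical. Unlike the extract, the sketch tests for a failed match before indexing into the result.

```python
import re

# Approximate regex equivalent (an assumption) of the sprintf_inverse format
# 'http://farm%s.static.flickr.com/%s/%s_%s.%s' used by the hook.
FLICKR_IMG = re.compile(
    r"http://farm([^.]+)\.static\.flickr\.com/([^/]+)/([^_]+)_([^.]+)\.(.+)$"
)


def parse_flickr_image_url(url: str):
    """Return the five URL components as a list, or None if the URL does not fit."""
    match = FLICKR_IMG.match(url)
    return list(match.groups()) if match else None


parts = parse_flickr_image_url(
    "http://farm4.static.flickr.com/3001/1234567890_abcdef1234.jpg"
)
if parts is not None and len(parts) == 5:
    img_id = parts[2]  # third component, as in img_id := tmp[2]
    print(img_id)
```

The image identifier extracted this way is what the hook passes to flickr.photos.getInfo together with the API key.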

MusicBrainz Example: A Music Metadatabase

To illustrate some of the material presented so far, we'll delve deeper into the MusicBrainz cartridge mentioned earlier.

MusicBrainz XML Web Service

The cartridge extracts data through the MusicBrainz XML Web Service using, as the basis for the initial query, an item type and MBID (MusicBrainz ID) extracted from the original URI submitted to the RDF proxy. A range of item types are supported including artist, release and track.

Using the album "Imagine" by John Lennon as an example, a standard HTML description of the album (which has an MBID of f237e6a0-4b0e-4722-8172-66f4930198bc) can be retrieved direct from MusicBrainz using the URL:

http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html

Alternatively, information can be extracted in XML form through the web service. A description of the tracks on the album can be obtained with the query:

http://musicbrainz.org/ws/1/release/f237e6a0-4b0e-4722-8172-66f4930198bc?type=xml&inc=tracks

The XML returned by the web service is shown below (only the first two tracks are shown for brevity):

<?xml version="1.0" encoding="UTF-8"?>

<metadata xmlns="http://musicbrainz.org/ns/mmd-1.0#"

xmlns:ext="http://musicbrainz.org/ns/ext-1.0#">

<release

xml:id="f237e6a0-4b0e-4722-8172-66f4930198bc" type="Album Official" >

<title>Imagine</title>

<text-representation language="ENG" script="Latn"/>

<asin>B0000457L2</asin>

<track-list>

<track

xml:id="b88bdafd-e675-4c6a-9681-5ea85ab99446">

<title>Imagine</title>

<duration>182933</duration>

</track>

<track

xml:id="b38ce90d-3c47-4ccd-bea2-4718c4d34b0d">

<title>Crippled Inside</title>

<duration>227906</duration>

</track>

. . .

</track-list>

</release>

</metadata>
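As a quick way to inspect such a response before writing a stylesheet, the XML can be read with Python's standard library. The sketch below embeds a trimmed copy of the response shown above (the elided tracks are simply left out), and assumes that the duration values are milliseconds, as MusicBrainz reports them. The function name list_tracks is hypothetical.

```python
import xml.etree.ElementTree as ET

MMD = "{http://musicbrainz.org/ns/mmd-1.0#}"
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"

# Trimmed copy of the web service response shown above.
SAMPLE = """<metadata xmlns="http://musicbrainz.org/ns/mmd-1.0#">
  <release xml:id="f237e6a0-4b0e-4722-8172-66f4930198bc" type="Album Official">
    <title>Imagine</title>
    <track-list>
      <track xml:id="b88bdafd-e675-4c6a-9681-5ea85ab99446">
        <title>Imagine</title>
        <duration>182933</duration>
      </track>
      <track xml:id="b38ce90d-3c47-4ccd-bea2-4718c4d34b0d">
        <title>Crippled Inside</title>
        <duration>227906</duration>
      </track>
    </track-list>
  </release>
</metadata>"""


def list_tracks(xml_text: str) -> list:
    """Return (track id, title, m:ss) for every track in the response."""
    root = ET.fromstring(xml_text)
    tracks = []
    for track in root.iter(MMD + "track"):
        millis = int(track.findtext(MMD + "duration"))
        minutes, seconds = divmod(millis // 1000, 60)
        tracks.append(
            (track.get(XML_ID), track.findtext(MMD + "title"), f"{minutes}:{seconds:02d}")
        )
    return tracks


for track_id, title, length in list_tracks(SAMPLE):
    print(track_id, title, length)
```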

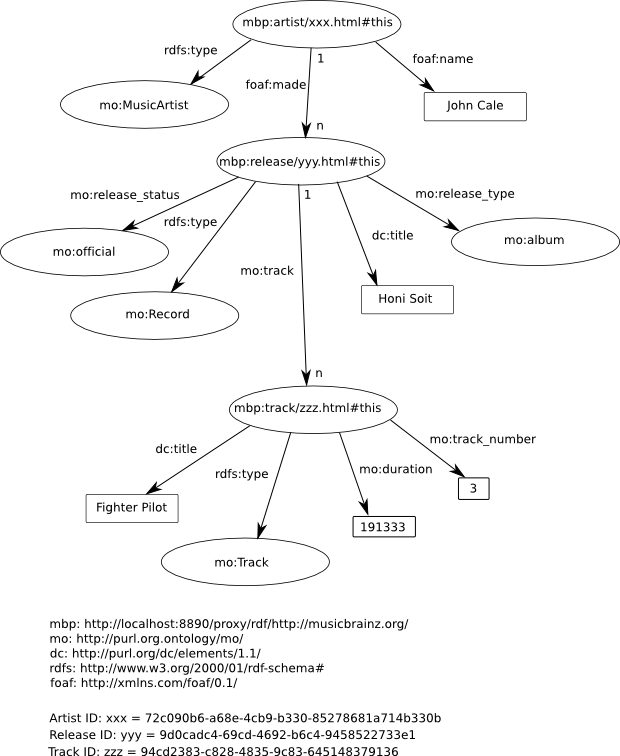

Although, as shown above, MusicBrainz defines its own XML Metadata Format to represent music metadata, the MusicBrainz cartridge converts the raw data to a subset of the Music Ontology, an RDF vocabulary which aims to provide a set of core classes and properties for describing music on the Semantic Web. Part of the subset used is depicted in the following RDF graph (representing in this case a John Cale album).

Figure 16.110. RDF graph

With the prefix mo: denoting the Music Ontology at http://purl.org/ontology/mo/, it can be seen that artists are represented by instances of class mo:Artist, their albums, records etc. by instances of class mo:Release, and tracks on these releases by instances of class mo:Track. The property foaf:made links an artist to his or her releases. The property mo:track links a release to the tracks it contains.

RDF Output

An RDF description of the album can be obtained by sponging the same URL, i.e. by submitting it to the Sponger's proxy interface using the URL:

http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html

The extract below shows part of the (reorganized) RDF output returned by the Sponger for "Imagine". Only the album's title track is included.

<?xml version="1.0" encoding="utf-8" ?>
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#">
  <rdf:Description rdf:about="http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html">
    <rdf:type rdf:resource="http://xmlns.com/foaf/0.1/Document"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html">
    <foaf:primaryTopic xmlns:foaf="http://xmlns.com/foaf/0.1/" rdf:resource="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://purl.org/ontology/mo/">
    <rdf:type rdf:resource="http://www.openlinksw.com/schema/attribution#DataSource"/>
  </rdf:Description>
  ...
  <rdf:Description rdf:about="http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html">
    <rdfs:isDefinedBy rdf:resource="http://purl.org/ontology/mo/"/>
  </rdf:Description>
  ...
  <!-- Record description -->
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this">
    <rdf:type rdf:resource="http://purl.org/ontology/mo/Record"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this">
    <dc:title xmlns:dc="http://purl.org/dc/elements/1.1/">Imagine</dc:title>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this">
    <mo:release_status xmlns:mo="http://purl.org/ontology/mo/" rdf:resource="http://purl.org/ontology/mo/official"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this">
    <mo:release_type xmlns:mo="http://purl.org/ontology/mo/" rdf:resource="http://purl.org/ontology/mo/album"/>
  </rdf:Description>
  <!-- Title track description -->
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html#this">
    <mo:track xmlns:mo="http://purl.org/ontology/mo/" rdf:resource="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/track/b88bdafd-e675-4c6a-9681-5ea85ab99446.html#this"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/track/b88bdafd-e675-4c6a-9681-5ea85ab99446.html#this">
    <rdf:type rdf:resource="http://purl.org/ontology/mo/Track"/>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/track/b88bdafd-e675-4c6a-9681-5ea85ab99446.html#this">
    <dc:title xmlns:dc="http://purl.org/dc/elements/1.1/">Imagine</dc:title>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/track/b88bdafd-e675-4c6a-9681-5ea85ab99446.html#this">
    <mo:track_number xmlns:mo="http://purl.org/ontology/mo/">1</mo:track_number>
  </rdf:Description>
  <rdf:Description rdf:about="http://demo.openlinksw.com/about/rdf/http://musicbrainz.org/track/b88bdafd-e675-4c6a-9681-5ea85ab99446.html#this">
    <mo:duration xmlns:mo="http://purl.org/ontology/mo/" rdf:datatype="http://www.w3.org/2001/XMLSchema#integer">182933</mo:duration>
  </rdf:Description>
</rdf:RDF>

Cartridge Hook Function

The cartridge's hook function is listed below. It is important to note that MusicBrainz supports a variety of query types, each of which returns a different set of information, depending on the item type being queried. Full details can be found on the MusicBrainz site. The Sponger cartridge is capable of handling all the query types supported by MusicBrainz and is intended to be used in a drill-down scenario, as would be the case when using an RDF browser such as the OpenLink Data Explorer (ODE). This example focuses primarily on the types release and track.

create procedure DB.DBA.RDF_LOAD_MBZ (

in graph_iri varchar, in new_origin_uri varchar, in dest varchar,

inout _ret_body any, inout aq any, inout ps any, inout _key any,

inout opts any)

{

declare kind, id varchar;

declare tmp, incs any;

declare uri, cnt, hdr, inc, xd, xt varchar;

tmp := regexp_parse ('http://musicbrainz.org/([^/]*)/([^\.]+)', new_origin_uri, 0);

declare exit handler for sqlstate '*'

{

-- dbg_printf ('%s', __SQL_MESSAGE);

return 0;

};

if (length (tmp) < 6)

return 0;

kind := subseq (new_origin_uri, tmp[2], tmp[3]);

id := subseq (new_origin_uri, tmp[4], tmp[5]);

incs := vector ();

if (kind = 'artist')

{

inc := 'aliases artist-rels label-rels release-rels track-rels url-rels';

incs :=

vector (

'sa-Album', 'sa-Single', 'sa-EP', 'sa-Compilation', 'sa-Soundtrack',

'sa-Spokenword', 'sa-Interview', 'sa-Audiobook', 'sa-Live', 'sa-Remix', 'sa-Other'

, 'va-Album', 'va-Single', 'va-EP', 'va-Compilation', 'va-Soundtrack',

'va-Spokenword', 'va-Interview', 'va-Audiobook', 'va-Live', 'va-Remix', 'va-Other'

);

}

else if (kind = 'release')

inc := 'artist counts release-events discs tracks artist-rels label-rels release-rels track-rels url-rels track-level-rels labels';

else if (kind = 'track')

inc := 'artist releases puids artist-rels label-rels release-rels track-rels url-rels';

else if (kind = 'label')

inc := 'aliases artist-rels label-rels release-rels track-rels url-rels';

else

return 0;

if (dest is null)

DELETE FROM DB.DBA.RDF_QUAD WHERE G = DB.DBA.RDF_MAKE_IID_OF_QNAME (graph_iri);

DB.DBA.RDF_LOAD_MBZ_1 (graph_iri, new_origin_uri, dest, kind, id, inc);

DB.DBA.TTLP (sprintf ('<%S> <http://xmlns.com/foaf/0.1/primaryTopic> <%S> .\n<%S> a <http://xmlns.com/foaf/0.1/Document> .',

new_origin_uri, DB.DBA.RDF_SPONGE_PROXY_IRI (new_origin_uri), new_origin_uri),

'', graph_iri);

foreach (any inc1 in incs) do

{

DB.DBA.RDF_LOAD_MBZ_1 (graph_iri, new_origin_uri, dest, kind, id, inc1);

}

return 1;

};

The hook function uses a subordinate procedure RDF_LOAD_MBZ_1:

create procedure DB.DBA.RDF_LOAD_MBZ_1 (in graph_iri varchar, in new_origin_uri varchar,

in dest varchar, in kind varchar, in id varchar, in inc varchar)

{

declare uri, cnt, xt, xd, hdr any;

uri := sprintf ('http://musicbrainz.org/ws/1/%s/%s?type=xml&inc=%U', kind, id, inc);

cnt := RDF_HTTP_URL_GET (uri, '', hdr, 'GET', 'Accept: */*');

xt := xtree_doc (cnt);

xd := DB.DBA.RDF_MAPPER_XSLT (registry_get ('_cartridges_path_') || 'xslt/mbz2rdf.xsl', xt,

vector ('baseUri', new_origin_uri));

xd := serialize_to_UTF8_xml (xd);

DB.DBA.RM_RDF_LOAD_RDFXML (xd, new_origin_uri, coalesce (dest, graph_iri));

};
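The way the hook turns a MusicBrainz page URL into a web service request can be traced with a small Python model. It reuses the regular expression and the URL template from the two procedures above. The function name web_service_url is hypothetical, and urllib's quote is used here as a stand-in for the %U format of sprintf, which is assumed to URL-encode its argument.

```python
import re
from urllib.parse import quote

# Same pattern as the regexp_parse call in DB.DBA.RDF_LOAD_MBZ.
PAGE_URL = re.compile(r"http://musicbrainz.org/([^/]*)/([^\.]+)")


def web_service_url(page_url: str, inc: str):
    """Map a MusicBrainz page URL to the matching ws/1 request URL."""
    match = PAGE_URL.match(page_url)
    if match is None:
        return None  # the hook returns 0 in this case
    kind, mbid = match.groups()
    return (
        f"http://musicbrainz.org/ws/1/{kind}/{mbid}"
        f"?type=xml&inc={quote(inc, safe='')}"
    )


print(web_service_url(
    "http://musicbrainz.org/release/f237e6a0-4b0e-4722-8172-66f4930198bc.html",
    "tracks",
))
```

For the "Imagine" release and inc=tracks this reproduces the query URL shown in the MusicBrainz XML Web Service section.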

XSLT Stylesheet

The key sections of the MusicBrainz XSLT template relevant to this example are listed below. Only the sections relating to an artist, his releases, or the tracks on those releases, are shown.

<!DOCTYPE xsl:stylesheet [

<!ENTITY xsd "http://www.w3.org/2001/XMLSchema#">

<!ENTITY rdf "http://www.w3.org/1999/02/22-rdf-syntax-ns#">

<!ENTITY rdfs "http://www.w3.org/2000/01/rdf-schema#">

<!ENTITY mo "http://purl.org/ontology/mo/">

<!ENTITY foaf "http://xmlns.com/foaf/0.1/">

<!ENTITY mmd "http://musicbrainz.org/ns/mmd-1.0#">

<!ENTITY dc "http://purl.org/dc/elements/1.1/">

]>

<xsl:stylesheet

version="1.0"

xmlns:xsl="http://www.w3.org/1999/XSL/Transform"

xmlns:vi="http://www.openlinksw.com/virtuoso/xslt/"

xmlns:rdf="&rdf;"

xmlns:rdfs="&rdfs;"

xmlns:foaf="&foaf;"

xmlns:mo="&mo;"

xmlns:mmd="&mmd;"

xmlns:dc="&dc;"

>

<xsl:output method="xml" indent="yes" />

<xsl:variable name="base" select="'http://musicbrainz.org/'"/>

<xsl:variable name="uc">ABCDEFGHIJKLMNOPQRSTUVWXYZ</xsl:variable>

<xsl:variable name="lc">abcdefghijklmnopqrstuvwxyz</xsl:variable>

<xsl:template match="/mmd:metadata">

<rdf:RDF>

<xsl:apply-templates />

</rdf:RDF>

</xsl:template>

...

<xsl:template match="mmd:artist[@type='Person']">

<mo:MusicArtist rdf:about="{vi:proxyIRI (concat($base,'artist/',@id,'.html'))}">

<foaf:name><xsl:value-of select="mmd:name"/></foaf:name>

<xsl:for-each select="mmd:release-list/mmd:release|mmd:relation-list[@target-type='Release']/mmd:relation/mmd:release">

<foaf:made rdf:resource="{vi:proxyIRI (concat($base,'release/',@id,'.html'))}"/>

</xsl:for-each>

</mo:MusicArtist>

<xsl:apply-templates />

</xsl:template>

<xsl:template match="mmd:release">

<mo:Record rdf:about="{vi:proxyIRI (concat($base,'release/',@id,'.html'))}">

<dc:title><xsl:value-of select="mmd:title"/></dc:title>

<mo:release_type rdf:resource="&mo;{translate (substring-before (@type, ' '),

$uc, $lc)}"/>

<mo:release_status rdf:resource="&mo;{translate (substring-after (@type, ' '), $uc,

$lc)}"/>

<xsl:for-each select="mmd:track-list/mmd:track">

<mo:track rdf:resource="{vi:proxyIRI (concat($base,'track/',@id,'.html'))}"/>

</xsl:for-each>

</mo:Record>

<xsl:apply-templates select="mmd:track-list/mmd:track"/>

</xsl:template>

<xsl:template match="mmd:track">

<mo:Track rdf:about="{vi:proxyIRI (concat($base,'track/',@id,'.html'))}">

<dc:title><xsl:value-of select="mmd:title"/></dc:title>

<mo:track_number><xsl:value-of select="position()"/></mo:track_number>

<mo:duration rdf:datatype="&xsd;integer">

<xsl:value-of select="mmd:duration"/>

</mo:duration>

<xsl:if test="artist[@id]">

<foaf:maker rdf:resource="{vi:proxyIRI (concat ($base, 'artist/',

artist/@id, '.html'))}"/>

</xsl:if>

<mo:musicbrainz rdf:resource="{vi:proxyIRI (concat ($base, 'track/', @id, '.html'))}"/>

</mo:Track>

</xsl:template>

...

<xsl:template match="text()"/>

</xsl:stylesheet>
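The mmd:release template derives two resources from the single type attribute of a release. The Python sketch below mirrors that step to make the mapping explicit: it splits "Album Official" at the first space, lower-cases both halves and prefixes the Music Ontology namespace, which yields the mo/album and mo/official resources seen in the RDF output earlier. The function name is hypothetical, and str.lower() is only an approximation of the ASCII-only translate() call.

```python
MO = "http://purl.org/ontology/mo/"


def release_type_and_status(type_attr: str):
    """Mirror substring-before / substring-after on the first space."""
    if " " in type_attr:
        head, _, tail = type_attr.partition(" ")
    else:
        # XPath returns an empty string from both functions when the
        # separator is absent.
        head, tail = "", ""
    return MO + head.lower(), MO + tail.lower()


release_type, release_status = release_type_and_status("Album Official")
print(release_type)
print(release_status)
```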

Entity Extractor & Mapper Component

The Virtuoso Sponger Cartridge RDF Extractor is used to extract RDF from a Web data source. It consumes services from Virtuoso/PL, C/C++ and Java based RDF extractors.

The RDF mappers provide a way to extract metadata from non-RDF documents, such as HTML pages, images and Office documents, and pass it to the SPARQL sponger (the crawler which retrieves missing source graphs). For brevity, the remainder of this section refers to an "RDF mapper" simply as a "mapper".

A mapper consists of a PL procedure (the hook) and an extractor. The extractor itself can be built using PL, C or any external language supported by the Virtuoso server.

Once the mapper is developed it must be plugged into the SPARQL engine by adding a record in the table DB.DBA.SYS_RDF_MAPPERS.

If a SPARQL query instructs the SPARQL processor to retrieve the target graph into local storage, the SPARQL sponger is invoked. If the target graph IRI represents a dereferenceable URL, the content is retrieved using content negotiation. The next step is to detect the content type:

-

If the content is RDF and no further transformation such as GRDDL is needed, the process stops.

-

If the content is of some other type, such as 'text/plain', and is not known to have metadata, the SPARQL sponger looks in the DB.DBA.SYS_RDF_MAPPERS table in order of RM_ID and calls the mapper hook for every matching URL or MIME type pattern (depending on the RM_TYPE column).

-

If the hook returns zero, the next mapper is tried.

-

If the result is negative, the process stops and the SPARQL processor is told that nothing was retrieved.

-

If the result is positive, the process stops and the SPARQL processor is told that metadata was retrieved.

-

Virtuoso/PL based Extractors

PL hook requirements:

Every PL function used to plug a mapper into the SPARQL engine must have the following parameters, in this order:

-

in graph_iri varchar: the IRI of the graph currently being retrieved

-

in new_origin_uri varchar: the URL of the document retrieved

-

in destination varchar: the destination graph IRI

-

inout content any: the content of the document retrieved by SPARQL sponger

-

inout async_queue any: an asynchronous queue, which can be used to push work to be executed in the background if needed

-

inout ping_service any: the value of the PingService parameter in the [SPARQL] section of the INI file; it can be used to configure a notification service such as pingthesemanticweb.com

-

inout api_key any: a plain-text single key value or a serialized vector of key structures; essentially the value of the RM_KEY column of the DB.DBA.SYS_RDF_MAPPERS table

Note: the names of the parameters are not important, but their order and presence are.

Example Implementation:

The example script below implements a basic mapper which maps the text/plain MIME type to an imaginary ontology. The ontology extends the class Document from FOAF with the properties 'txt:UniqueWords' and 'txt:Chars', where the prefix 'txt:' stands for 'urn:txt:v0.0:'.

use DB;

create procedure DB.DBA.RDF_LOAD_TXT_META

(

in graph_iri varchar,

in new_origin_uri varchar,

in dest varchar,

inout ret_body any,

inout aq any,

inout ps any,

inout ser_key any

)

{

declare words, chars int;

declare vtb, arr, subj, ses, str any;

-- on any error we simply report that nothing could be done

declare exit handler for sqlstate '*'

{

return 0;

};

subj := coalesce (dest, new_origin_uri);

vtb := vt_batch ();

chars := length (ret_body);

-- using the text index procedures we get a list of words

vt_batch_feed (vtb, ret_body, 1);

arr := vt_batch_strings_array (vtb);

-- the list has 'word' and positions array, so we must divide by 2

words := length (arr) / 2;

ses := string_output ();

-- we compose the N3 text

http (sprintf ('<%s> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://xmlns.com/foaf/0.1/Document> .\n', subj), ses);

http (sprintf ('<%s> <urn:txt:v0.0:UniqueWords> "%d" .\n', subj, words), ses);

http (sprintf ('<%s> <urn:txt:v0.0:Chars> "%d" .\n', subj, chars), ses);

str := string_output_string (ses);

-- we push the N3 text into the local store

DB.DBA.TTLP (str, new_origin_uri, subj);

return 1;

};

DELETE FROM DB.DBA.SYS_RDF_MAPPERS WHERE RM_HOOK = 'DB.DBA.RDF_LOAD_TXT_META';

INSERT SOFT DB.DBA.SYS_RDF_MAPPERS (RM_PATTERN, RM_TYPE, RM_HOOK, RM_KEY, RM_DESCRIPTION)

VALUES ('(text/plain)', 'MIME', 'DB.DBA.RDF_LOAD_TXT_META', null, 'Text Files (demo)');

-- set the order to a large number so that existing mappers are not disturbed

update DB.DBA.SYS_RDF_MAPPERS

SET RM_ID = 2000

WHERE RM_HOOK = 'DB.DBA.RDF_LOAD_TXT_META';
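To make clear what the demo mapper writes into the store, its computation can be mimicked in Python. This is a rough model under stated assumptions: the function name text_meta_triples and the subject URL are hypothetical, and the regular-expression tokenizer only approximates the word list produced by Virtuoso's text index procedures, so word counts may differ from what the real mapper reports.

```python
import re


def text_meta_triples(subject: str, body: str) -> str:
    """Build the three N3 statements the demo mapper generates."""
    chars = len(body)
    # Approximation: case-insensitive set of \w+ tokens.
    unique_words = len({word.lower() for word in re.findall(r"\w+", body)})
    return "".join([
        f"<{subject}> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> "
        f"<http://xmlns.com/foaf/0.1/Document> .\n",
        f'<{subject}> <urn:txt:v0.0:UniqueWords> "{unique_words}" .\n',
        f'<{subject}> <urn:txt:v0.0:Chars> "{chars}" .\n',
    ])


print(text_meta_triples("http://example.org/hamlet.txt", "to be or not to be"))
```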

To test the mapper, use the /sparql endpoint with the option 'Retrieve remote RDF data for all missing source graphs' and execute:

SELECT *

FROM <URL-of-a-txt-file>

WHERE { ?s ?p ?o }

The SPARQL_UPDATE role must be granted to the "SPARQL" account to allow the local repository to be updated via the Network Resource Fetch feature.

Authentication in Sponger

To support user-defined authentication, additional parameters have been added to the /proxy/rdf and /sparql endpoints. To use it, the RDF browser and iSPARQL should send the following URL parameters:

-

for /proxy/rdf endpoint:

login=<account name>

-

for /sparql endpoint:

get-login=<account name>
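A client could attach these parameters as in the following Python sketch. Only the parameter names login and get-login come from the text above. The helper with_param, the host localhost:8890, the account name demo and the use of the standard query parameter on /sparql are assumptions made for illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_param(url: str, name: str, value: str) -> str:
    """Return url with one more query-string parameter appended."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((name, value))
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical endpoint and account name.
sparql_url = with_param(
    "http://localhost:8890/sparql", "query", "SELECT * WHERE { ?s ?p ?o } LIMIT 1"
)
sparql_url = with_param(sparql_url, "get-login", "demo")
print(sparql_url)
```

The same helper would add login=<account name> to a /proxy/rdf request.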

Registry

The table DB.DBA.SYS_RDF_MAPPERS is used as the registry for RDF mappers.

create table DB.DBA.SYS_RDF_MAPPERS (

RM_ID integer identity, -- mapper ID, designates the order of execution

RM_PATTERN varchar, -- a REGEX pattern to match the URL or MIME type

RM_TYPE varchar default 'MIME', -- which property of the current resource to match: MIME or URL are supported at present

RM_HOOK varchar, -- fully qualified PL function name, e.g. DB.DBA.MY_MAPPER_FUNCTION

RM_KEY long varchar, -- API-specific key to use

RM_DESCRIPTION long varchar, -- mapper description, free text

RM_ENABLED integer default 1, -- a flag (0 or 1) to exclude or include the given mapper in the processing chain

primary key (RM_TYPE, RM_PATTERN))

;

The current way to register, update or unregister a mapper is simply a DML statement, i.e. INSERT, UPDATE or DELETE.

Execution order and processing

When the SPARQL processor retrieves a resource with unknown content, it looks in the mapper registry and loops over every record whose RM_ENABLED flag is true. The look-up sequence is ordered by the RM_ID column. For each record it tries to match either the MIME type or the URL against the RM_PATTERN value; if there is a match, the function specified in the RM_HOOK column is called. If the function does not exist or signals an error, the SPARQL processor moves on to the next record.

When does it stop looking? It stops when the value returned by the mapper function is a positive or negative number. A negative return value stops processing and means that no RDF was supplied; a positive return value means that RDF data was extracted. If zero is returned, the SPARQL processor looks for the next mapper. A mapper function can also return zero when it has extracted data but expects the next mapper in the chain to add more RDF data.

If none of the mappers matches the resource (by neither MIME type nor URL), the built-in WebDAV metadata extractor is called.
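The processing rules above can be summarised as a small Python model. It is a sketch of the described behaviour, not Virtuoso's implementation: the Mapper class, the run_mappers function, the hook names and the sample registry are all hypothetical, and hooks are reduced to callables taking a URL and a MIME type.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class Mapper:
    rm_id: int
    rm_pattern: str
    rm_type: str                      # "MIME" or "URL"
    hook: Callable[[str, str], int]
    enabled: bool = True


def run_mappers(mappers, url: str, mime: str) -> int:
    """Try enabled mappers in RM_ID order until one returns non-zero."""
    for mapper in sorted(mappers, key=lambda m: m.rm_id):
        if not mapper.enabled:
            continue
        subject = mime if mapper.rm_type == "MIME" else url
        if not re.match(mapper.rm_pattern, subject):
            continue
        try:
            result = mapper.hook(url, mime)
        except Exception:
            continue                  # a failing hook is skipped
        if result != 0:
            return result             # positive: RDF extracted; negative: give up
    return 0                          # nothing decisive: fall back to built-in extractor


calls = []


def make_hook(name, result):
    def hook(url, mime):
        calls.append(name)
        if isinstance(result, Exception):
            raise result
        return result
    return hook


registry = [
    Mapper(30, r"text/.*", "MIME", make_hook("third", 1)),
    Mapper(10, r"text/.*", "MIME", make_hook("first", 0)),
    Mapper(20, r"http://example\.org/.*", "URL", make_hook("broken", RuntimeError("boom"))),
    Mapper(40, r"text/.*", "MIME", make_hook("never", -1)),
]
outcome = run_mappers(registry, "http://example.org/readme.txt", "text/plain")
print(outcome, calls)
```

In this run the first mapper returns 0, the second fails and is skipped, and the third returns 1, so the fourth is never called.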

Extension function

The mapper function is a PL stored procedure with the following signature:

THE_MAPPER_FUNCTION_NAME (

in graph_iri varchar,

in origin_uri varchar,

in destination_uri varchar,

inout content varchar,

inout async_notification_queue any,

inout ping_service any,

inout keys any

)

{

-- do processing here

-- return -1, 0 or 1 (as explained above in Execution order and processing section)

}

;

Parameters

-

graph_iri - the target graph IRI

-

origin_uri - the URI currently being processed

-

destination_uri - get:destination value

-

content - the resource content

-

async_notification_queue - if the PingService parameter is specified in the SPARQL section of the INI file, this is a pre-allocated asynchronous queue to be used to call the ping service

-

ping_service - the URL of the ping service, as configured by the PingService parameter in the SPARQL section of the INI file

-

keys - the string value contained in the RM_KEY column for the given mapper; it can be a single string or a serialized array, and can generally be used for mapper-specific data.

Return value

-

0 - no data was retrieved or some next matching mapper must extract more data

-

1 - data is retrieved, stop looking for other mappers

-

-1 - no data is retrieved, stop looking for more data

Cartridges package content

Virtuoso supplies the cartridges for extracting RDF data from certain popular Web resources and file types as a VAD package, cartridges_dav.vad. If it is not already installed, it can be installed using the VAD_INSTALL function; see the VAD chapter of the documentation for details.

HTTP-in-RDF

Maps the HTTP request and response to the HTTP Vocabulary in RDF; see http://www.w3.org/2006/http#.

This mapper is disabled by default. If it is enabled, it must be first in the order of execution.

It always returns 0, which means that any other mapper should push more data.

HTML

This mapper is composite. It looks for metadata which can be specified in an HTML page as follows:

-

Embedded/linked RDF

-

scan for meta in RDF

<link rel="meta" type="application/rdf+xml"

-

RDF embedded in xHTML (as markup or inside XML comments)

-

-

Micro-formats

-

GRDDL - GRDDL Data Views: RDF expressed in XHTML and XML: http://www.w3.org/2003/g/data-view#

-

eRDF - http://purl.org/NET/erdf/profile

-

RDFa

-

hCard - http://www.w3.org/2006/03/hcard

-

hCalendar - http://dannyayers.com/microformats/hcalendar-profile

-

hReview - http://dannyayers.com/micromodels/profiles/hreview

-

relLicense - CC license: http://web.resource.org/cc/schema.rdf

-

Dublin Core (DCMI) - http://purl.org/dc/elements/1.1/

-

geoURL - http://www.w3.org/2003/01/geo/wgs84_pos#

-

Google Base - OpenLink Virtuoso specific mapping

-

Ning Metadata

-

-

Feeds extraction

-

RSS/Linked Data - SIOC & AtomOWL

-

RSS 1.0 - RSS/RDF, SIOC & AtomOWL

-

Atom 1.0 - RSS/RDF, SIOC & AtomOWL

-

-

xHTML metadata transformation using FOAF (foaf:Document) and Dublin Core properties (dc:title, dc:subject etc.)

The HTML page mapper looks for RDF data in the order listed above. It tries to extract metadata at each step and returns a positive flag if any of the steps yields RDF data. If the page URL matches one of the other RDF mappers listed in the registry, it returns 0 so that the next mapper can extract more data. To function properly, this mapper must be executed before any other specific mappers.

Flickr URLs

This mapper extracts metadata for Flickr images using the Flickr REST API. To function properly, it must have a configured key. The Flickr mapper expresses the extracted metadata using the CC license, Dublin Core, Dublin Core Metadata Terms, GeoURL, FOAF and EXIF (http://www.w3.org/2003/12/exif/ns/) ontologies.

Amazon URLs

This mapper extracts metadata for Amazon articles using the Amazon REST API. It needs an Amazon API key in order to function.

eBay URLs

Uses the eBay REST API to extract metadata for eBay articles. It needs a key and user name to be configured in order to work.

Open Office (OO) documents

OO documents contain metadata which can be extracted using UNZIP, so this extractor needs the Virtuoso unzip plugin to be configured on the server.

Yahoo traffic data URLs

Implements transformation of the result of Yahoo traffic data to RDF.

iCal files

Transforms iCal files to RDF as per http://www.w3.org/2002/12/cal/ical#.

Binary content, PDF, PowerPoint

Unknown binary content, PDF files and MS PowerPoint files can be transformed to RDF using the Aperture framework (http://aperture.sourceforge.net/). To work, this mapper needs Virtuoso with Java hosting support, the Aperture framework and MetaExtractor.class installed on the host system.

The Aperture framework and MetaExtractor.class must be installed on the system before the Cartridges VAD package is installed. If the package is already installed, you can activate this mapper simply by re-installing the VAD.

Setting-up Virtuoso with Java hosting to run Aperture framework

-

Install a Virtuoso binary which includes built-in Java hosting support (The executable name will indicate whether the required hosting support is built in - a suitably enabled executable will include javavm in the name, for example virtuoso-javavm-t, rather than virtuoso-t).

-

Download the Aperture framework from http://aperture.sourceforge.net.

-

Unpack the contents of the framework's lib directory into an 'aperture' subdirectory of the Virtuoso working directory, i.e. of the directory containing the database and virtuoso.ini files.

-

Ensure the Virtuoso working directory includes a 'lib' subdirectory containing the file MetaExtractor.class. (At the current time MetaExtractor.class is not included in the cartridges VAD. Please contact OpenLink Technical Support to obtain a copy.)

-

In the [Parameters] section of the virtuoso.ini configuration file:

-

Add the following line. Line breaks have been inserted here for clarity; in virtuoso.ini the value is entered as a single line:

JavaClasspath = lib:aperture/DFKIUtils2.jar:aperture/JempBox-0.2.0.jar:aperture/activation-1.0.2-upd2.jar:aperture/aduna-commons-xml-2.0.jar:
aperture/ant-compression-utils-1.7.1.jar:aperture/aperture-1.2.0.jar:aperture/aperture-examples-1.2.0.jar:aperture/aperture-test-1.2.0.jar:
aperture/applewrapper-0.2.jar:aperture/bcmail-jdk14-132.jar:aperture/bcprov-jdk14-132.jar:aperture/commons-codec-1.3.jar:aperture/commons-httpclient-3.1.jar:
aperture/commons-lang-2.3.jar:aperture/demork-2.1.jar:aperture/flickrapi-1.0.jar:aperture/fontbox-0.2.0-dev.jar:aperture/htmlparser-1.6.jar:
aperture/ical4j-1.0-beta4.jar:aperture/infsail-0.1.jar:aperture/jacob-1.10.jar:aperture/jai_codec-1.1.3.jar:aperture/jai_core-1.1.3.jar:aperture/jaudiotagger-1.0.8.jar:
aperture/jcl104-over-slf4j-1.5.0.jar:aperture/jpim-0.1-aperture-1.jar:aperture/junit-3.8.1.jar:aperture/jutf7-0.9.0.jar:aperture/mail-1.4.jar:
aperture/metadata-extractor-2.4.0-beta-1.jar:aperture/mstor-0.9.11.jar:aperture/nrlvalidator-0.1.jar:aperture/openrdf-sesame-2.2.1-onejar-osgi.jar:
aperture/osgi.core-4.0.jar:aperture/pdfbox-0.7.4-dev-20071030.jar:aperture/poi-3.0.2-FINAL-20080204.jar:aperture/poi-scratchpad-3.0.2-FINAL-20080204.jar:
aperture/rdf2go.api-4.6.2.jar:aperture/rdf2go.impl.base-4.6.2.jar:aperture/rdf2go.impl.sesame20-4.6.2.jar:aperture/rdf2go.impl.util-4.6.2.jar:
aperture/slf4j-api-1.5.0.jar:aperture/slf4j-jdk14-1.5.0.jar:aperture/unionsail-0.1.jar:aperture/winlaf-0.5.1.jar

-

Ensure DirsAllowed includes the directories /tmp (or the temporary directory for the host operating system), lib and aperture.

-

Start the Virtuoso server with Java hosting support.

-

Configure the cartridge either by installing the cartridges VAD or, if the VAD is already installed, by executing procedure DB.DBA.RDF_APERTURE_INIT.

-

During the VAD installation process, RDF_APERTURE_INIT() configures the Aperture cartridge. If you look in the list of available cartridges under the RDF > Sponger tab in Conductor, you should see an entry for 'Binary Files'.

To check the cartridge has been configured, connect with Virtuoso's ISQL tool:

-

Issue the command:

SQL> SELECT udt_is_available('APERTURE.DBA.MetaExtractor');

-

Copy a test PDF document to the Virtuoso working directory, then execute:

SQL> SELECT APERTURE.DBA."MetaExtractor"().getMetaFromFile ('some_pdf_in_server_working_dir.pdf', 0);

... some RDF data should be returned ...

You should now be able to fetch all network resource document types supported by the Aperture framework (using one of the standard Sponger invocation mechanisms, for instance with a URL of the form http://example.com/about/rdf/http://targethost/targetfile.pdf), subject to the MIME type pattern filters configured for the cartridge in the Conductor UI. By default the Aperture cartridge is registered to match MIME types (application/octet-stream)|(application/pdf)|(application/mspowerpoint). To fetch all the network resource MIME types Aperture is capable of handling, change the MIME type pattern to 'application/.*'.
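The effect of the two MIME type patterns mentioned above can be illustrated with a small Python sketch. This is only an illustration: it uses Python's re module rather than Virtuoso's own regular expression engine, and it assumes the pattern must match the whole MIME type string. The helper names pattern_matches and sponger_url are hypothetical and are not part of Virtuoso.

```python
import re

# Patterns quoted in the text above.
DEFAULT_PATTERN = r"(application/octet-stream)|(application/pdf)|(application/mspowerpoint)"
BROAD_PATTERN = r"application/.*"


def pattern_matches(pattern, mime_type):
    """Return True if the pattern matches the whole MIME type.

    Assumption: whole-string matching. Virtuoso's matching rules may differ.
    """
    return re.fullmatch(pattern, mime_type) is not None


def sponger_url(sponger_host, target_url):
    """Build a URL of the form http://<host>/about/rdf/<target URL>."""
    return f"http://{sponger_host}/about/rdf/{target_url}"
```

Under these assumptions, application/msword is rejected by the default pattern but accepted by 'application/.*', while text/html is rejected by both.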

Important: The installation guidelines presented above have been verified on Mac OS X with Aperture 1.2.0. Some adjustment may be needed for different operating systems or versions of Aperture.
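Because jar file names change between Aperture versions, it may be easier to generate the JavaClasspath value from the jar files actually present than to type it by hand. The following Python sketch shows one way to do this. It assumes the 'lib' and 'aperture' subdirectories described above and the ':' separator used in the sample line; the function names java_classpath and jars_in are hypothetical helpers, not part of Virtuoso or Aperture.

```python
from pathlib import Path


def java_classpath(jar_names, class_dir="lib", jar_dir="aperture"):
    """Return a single-line JavaClasspath setting for virtuoso.ini.

    class_dir holds MetaExtractor.class; jar_dir holds the Aperture jars.
    Assumption: ':' is the separator, as in the sample line in this guide.
    """
    entries = [class_dir] + [f"{jar_dir}/{name}" for name in sorted(jar_names)]
    return "JavaClasspath = " + ":".join(entries)


def jars_in(directory):
    """List the names of the .jar files directly inside a directory."""
    return [p.name for p in Path(directory).glob("*.jar")]
```

Run from the Virtuoso working directory, java_classpath(jars_in("aperture")) would produce a line that can be pasted into the [Parameters] section.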

Examples & tutorials

To learn how to write your own RDF mapper, see the Virtuoso tutorial on this subject: http://demo.openlinksw.com/tutorial/rdf/rd_s_1/rd_s_1.vsp

Meta-Cartridges

So far the discussion has centered on 'primary' cartridges. However, Virtuoso supports an alternative type of cartridge, a 'meta-cartridge'. A meta-cartridge operates in essentially the same way as a primary cartridge: it has a cartridge hook function with the same signature, and it inserts data into the quad store through entity extraction and ontology mapping as before. Where meta-cartridges differ from primary cartridges is in their intent and their position in the cartridge invocation pipeline.

The purpose of meta-cartridges is to enrich graphs produced by other (primary) cartridges. They serve as general post-processors to add additional information about selected entities in an RDF graph. For instance, a particular meta-cartridge might be designed to search for entities of type 'umbel:Country' in a given graph, and then add additional statements about each country it finds, where the information contained in these statements is retrieved from the web service targeted by the meta-cartridge. One such example might be a 'World Bank' meta-cartridge which adds information relating to a country's GDP, its exports of goods and services as a percentage of GDP, and so on, retrieved using the World Bank web service API. In order to benefit from the World Bank meta-cartridge, any primary cartridge which might generate instance data relating to countries should ensure that each country instance it handles is also described as being of rdf:type 'umbel:Country'. Here, the UMBEL (Upper Mapping and Binding Exchange Layer) ontology is used as a data-source-agnostic classification system. It provides a core set of 20,000+ subject concepts which act as "a fixed set of reference points in a global knowledge space". The use of UMBEL in this way serves to decouple meta-cartridges from primary cartridges and data source specific ontologies.
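The enrichment idea can be sketched in a few lines of Python. This is a conceptual illustration only, not how a Virtuoso meta-cartridge is written (real cartridges are Virtuoso/PL procedures operating on the quad store). The graph is modelled as a list of (subject, predicate, object) tuples, and stub_lookup is a hypothetical stand-in for a call to a web service such as the World Bank API; the predicate it returns is invented for the example.

```python
RDF_TYPE = "rdf:type"
UMBEL_COUNTRY = "umbel:Country"


def entities_of_type(graph, type_name):
    """Return the sorted subjects that are declared to be of the given type."""
    return sorted({s for (s, p, o) in graph if p == RDF_TYPE and o == type_name})


def enrich(graph, type_name, lookup):
    """Return a new graph with extra statements about each matching entity.

    lookup(entity) yields (predicate, object) pairs, e.g. from a web service.
    The input graph is left unmodified.
    """
    added = []
    for subject in entities_of_type(graph, type_name):
        for predicate, obj in lookup(subject):
            added.append((subject, predicate, obj))
    return graph + added


def stub_lookup(entity):
    """Hypothetical stand-in for a web service call; returns placeholder data."""
    return [("ex:hypotheticalIndicator", "placeholder")]
```

Only entities typed as umbel:Country are touched, which is why a primary cartridge needs to emit that rdf:type statement for the meta-cartridge to find them.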

Virtuoso includes two default meta-cartridges which use UMBEL and OpenCalais to augment source graphs.

Registration

Meta-cartridges must be registered in the RDF_META_CARTRIDGES table, which fulfills a role similar to the SYS_RDF_MAPPERS table used by primary cartridges. The structure of the table, and the meaning and use of its columns, are similar to SYS_RDF_MAPPERS. The meta-cartridge hook function signature is identical to that for primary cartridges.

The RDF_META_CARTRIDGES table definition is as follows:

create table DB.DBA.RDF_META_CARTRIDGES (
  MC_ID INTEGER IDENTITY,    -- meta-cartridge ID. Determines the order of the
                             -- meta-cartridge's invocation in the Sponger
                             -- processing chain
  MC_SEQ INTEGER IDENTITY,
  MC_HOOK VARCHAR,           -- fully qualified Virtuoso/PL function name
  MC_TYPE VARCHAR,
  MC_PATTERN VARCHAR,        -- a REGEX pattern to match resource URL or
                             -- MIME type
  MC_KEY VARCHAR,            -- API specific key to use
  MC_OPTIONS ANY,            -- meta-cartridge specific options
  MC_DESC LONG VARCHAR,      -- meta-cartridge description (free text)
  MC_ENABLED INTEGER         -- a 0 or 1 integer flag to exclude or include
                             -- meta-cartridge from Sponger processing chain
);

(At the time of writing there is no Conductor UI for registering meta-cartridges; they must be registered using SQL. A Conductor interface for this task will be added in due course.)

Invocation

Meta-cartridges are invoked through the post-processing hook procedure RDF_LOAD_POST_PROCESS which is called, for every document retrieved, after RDF_LOAD_RDFXML loads fetched data into the Quad Store.

Cartridges in the meta-cartridge registry (RDF_META_CARTRIDGES) are configured to match a given MIME type or URI pattern. Matching meta-cartridges are invoked in order of their MC_SEQ value. Ordinarily a meta-cartridge should return 0, in which case the next meta-cartridge in the post-processing chain will be invoked. If it returns 1 or -1, the post-processing stops and no further meta-cartridges are invoked.

The order of processing by the Sponger cartridge pipeline is thus:

-

Try to get RDF in the form of TTL or RDF/XML. If RDF is retrieved, go to step 3.

-

Try generating RDF through the Sponger primary cartridges as before

-

Post-process the RDF using meta-cartridges in order of their MC_SEQ value. If a meta-cartridge returns 1 or -1, stop the post-processing chain.

Notice that meta-cartridges may be invoked even if primary cartridges are not.
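The post-processing rules described above (match by pattern, invoke in MC_SEQ order, stop when a hook returns 1 or -1) can be modelled with a short Python sketch. This is a simulation for illustration, not Virtuoso code. The MetaCartridge class and post_process function are hypothetical; the hook signature is simplified and does not match the real Virtuoso/PL hook signature; and the sketch assumes a pattern may match either the URL or the MIME type.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass
class MetaCartridge:
    seq: int                            # stands in for MC_SEQ
    pattern: str                        # stands in for MC_PATTERN
    hook: Callable[[str, list], int]    # stands in for MC_HOOK (simplified)
    enabled: int = 1                    # stands in for MC_ENABLED


def post_process(registry, url, mime_type, graph):
    """Invoke matching, enabled meta-cartridges in seq order.

    Returns the seq values of the cartridges that were invoked.
    """
    invoked = []
    for mc in sorted(registry, key=lambda m: m.seq):
        if not mc.enabled:
            continue
        # Assumption: the pattern may match either the URL or the MIME type.
        if not (re.search(mc.pattern, url) or re.search(mc.pattern, mime_type)):
            continue
        invoked.append(mc.seq)
        if mc.hook(url, graph) in (1, -1):
            break  # a return value of 1 or -1 stops the chain
    return invoked


def add_label(url, graph):
    """Example hook: add a statement and let the chain continue."""
    graph.append((url, "label", "example"))
    return 0


def add_and_stop(url, graph):
    """Example hook: add a statement and stop the chain."""
    graph.append((url, "note", "last"))
    return 1
```

With hooks registered at seq 1 (add_label), 2 (add_and_stop) and 3 (add_label), only the first two run, because the second returns 1.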

Example - A Campaign Finance Meta-Cartridge for Freebase

Note

The example which follows builds on a Freebase Sponger cartridge developed prior to the announcement of Freebase's support for generating Linked Data through its endpoint (http://rdf.freebase.com/). The OpenLink cartridge has since evolved to reflect these changes. A snapshot of the Freebase cartridge and stylesheet compatible with this example can be found here.

Freebase is an open community database of the world's information which serves facts and statistics rather than articles. Its designers see this difference in emphasis from article-oriented databases as beneficial for developers wanting to use Freebase facts in other websites and applications.

Virtuoso includes a Freebase cartridge in the cartridges VAD. The aim of the example presented here is to provide a lightweight meta-cartridge that conditionally adds triples to graphs generated by the Freebase cartridge when Freebase is describing a U.S. senator.

New York Times Campaign Finance (NYTCF) API

The New York Times Campaign Finance (NYTCF) API allows you to retrieve contribution and expenditure data based on United States Federal Election Commission filings. You can retrieve totals for a particular presidential candidate, see aggregates by ZIP code or state, or get details on a particular donor.

The API supports a number of query types. To keep this example from being overly long, the meta-cartridge supports just one of these - a query for the candidate details. An example query and the resulting output follow:

Query:

http://api.nytimes.com/svc/elections/us/v2/president/2008/finances/candidates/obama,barack.xml?api-key=xxxx

Result:

<result_set>
  <status>OK</status>
  <copyright>
    Copyright (c) 2008 The New York Times Company. All Rights Reserved.
  </copyright>
  <results>
    <candidate>
      <candidate_name>Obama, Barack</candidate_name>
      <committee_id>C00431445</committee_id>
      <party>D</party>
      <total_receipts>468841844</total_receipts>
      <total_disbursements>391437723.5</total_disbursements>
      <cash_on_hand>77404120</cash_on_hand>
      <net_individual_contributions>426902994</net_individual_contributions>
      <net_party_contributions>150</net_party_contributions>
      <net_pac_contributions>450</net_pac_contributions>
      <net_candidate_contributions>0</net_candidate_contributions>
      <federal_funds>0</federal_funds>
      <total_contributions_less_than_200>222694981.5</total_contributions_less_than_200>
      <total_contributions_2300>76623262</total_contributions_2300>
      <net_primary_contributions>46444638.81</net_primary_contributions>
      <net_general_contributions>30959481.19</net_general_contributions>
      <total_refunds>2058240.92</total_refunds>
      <date_coverage_from>2007-01-01</date_coverage_from>
      <date_coverage_to>2008-08-31</date_coverage_to>
    </candidate>
  </results>
</result_set>
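To make the shape of this result concrete, the following Python sketch extracts the candidate fields from a response of this form using the standard library XML parser. It is an illustration of the entity extraction step only and is not the cartridge's implementation. SAMPLE is an abbreviated copy of the result shown above, and the function name candidate_facts is hypothetical.

```python
import xml.etree.ElementTree as ET

# Abbreviated copy of the sample result shown above.
SAMPLE = """<result_set>
  <status>OK</status>
  <results>
    <candidate>
      <candidate_name>Obama, Barack</candidate_name>
      <committee_id>C00431445</committee_id>
      <party>D</party>
      <total_receipts>468841844</total_receipts>
    </candidate>
  </results>
</result_set>"""


def candidate_facts(xml_text):
    """Return one dict of {element name: text} per <candidate> element.

    Returns an empty list unless the <status> element is "OK".
    """
    root = ET.fromstring(xml_text)
    if root.findtext("status") != "OK":
        return []
    facts = []
    for candidate in root.iterfind("results/candidate"):
        facts.append({child.tag: (child.text or "").strip() for child in candidate})
    return facts
```

Each dictionary holds the raw extracted values as strings; mapping them to ontology terms is a separate step that this sketch does not attempt.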

Sponging Freebase

Using OpenLink Data Explorer

The following instructions assume you have the OpenLink Data Explorer (ODE) browser extension installed in your browser.

An HTML description of Barack Obama can be obtained directly from Freebase by pasting the following URL into your browser: http://www.freebase.com/view/en/barack_obama

To view RDF data fetched from this page, select 'Linked Data Sources' from the browser's 'View' menu. An OpenLink Data Explorer interface will load in a new tab.

Clicking on the 'Barack Obama' link under the 'Person' category displayed by ODE fetches RDF data using the Freebase cartridge. Click the 'down arrow' adjacent to the 'Barack Obama' link to explore the retrieved data.